Tabular Reinforcement Learning

Overview

Bridge from exact planning to learning in unknown MDPs. Keep the exploration logic, convergence preconditions, and where optimism pays off.

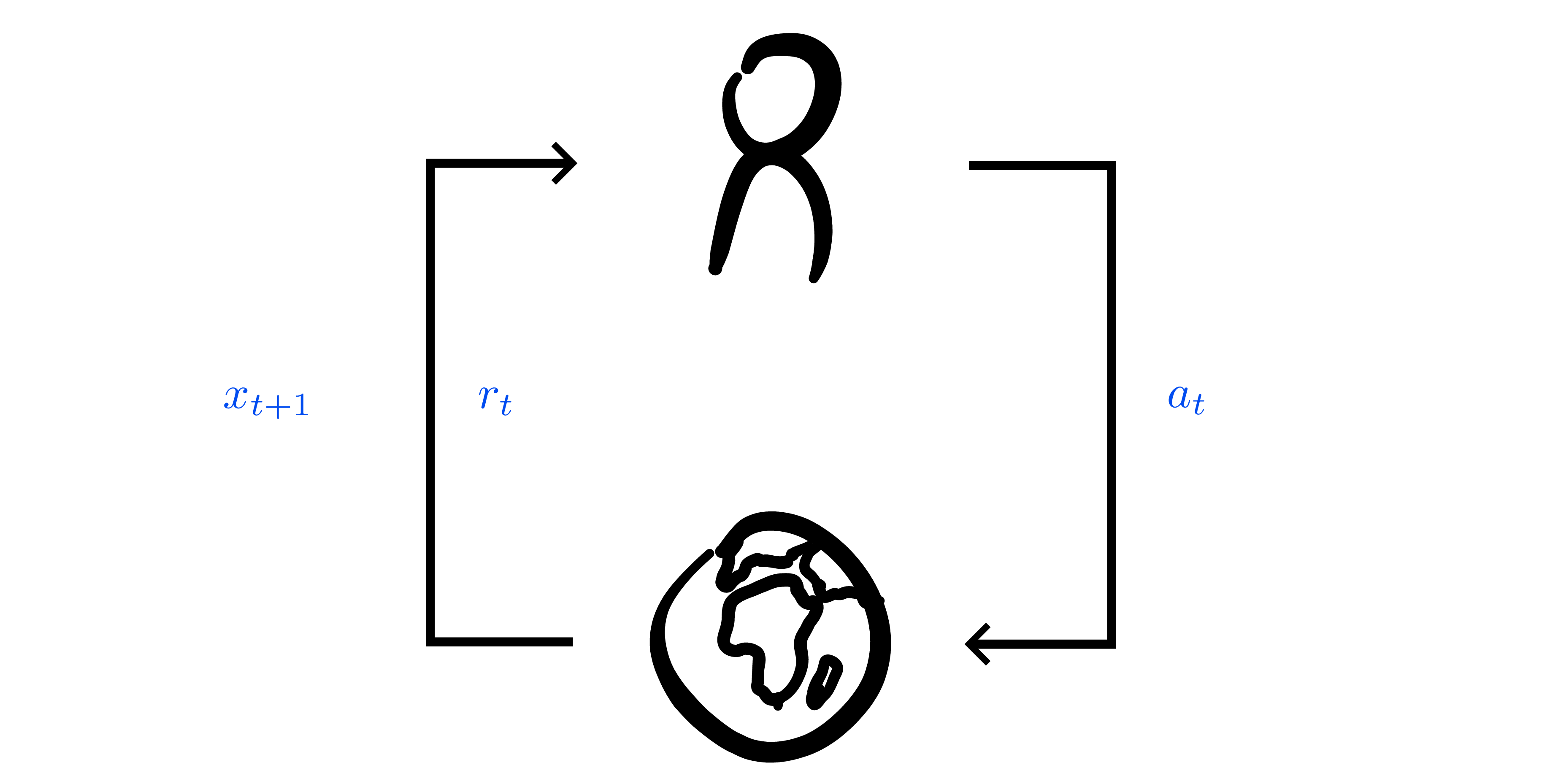

Data model and independence

- Trajectories \(\tau=(x_0,a_0,r_0,x_1,\dots)\) with transitions drawn from unknown \(p\) and \(r\).

- Conditional independence (Eq. 11.2): repeated visits to the same \((x,a)\) give IID samples of \(p(\cdot\mid x,a)\) and \(r(x,a)\) ⇒ Hoeffding + LLN applicable per state-action.

- Objective: learn policy maximizing discounted return \(G_0\) without prior model access.

Model-based tabular control

- Empirical model \(\hat{p}(x'\mid x,a)=N(x,a,x')/N(x,a)\), \(\hat{r}(x,a)=\sum r/N(x,a)\).

- Monte Carlo control: alternate policy evaluation (episode rollouts) and greedy improvement. Exploration via \(\varepsilon\)-greedy with Robbins–Monro schedule (\(\sum \varepsilon_t=\infty\), \(\sum \varepsilon_t^2<\infty\)) ⇒ GLIE ⇒ almost sure convergence to \(\pi^*\). Softmax/Boltzmann exploration uses Gibbs distribution \(\pi_\lambda(a\mid x)\propto e^{Q(x,a)/\lambda}\) for value-directed exploration.

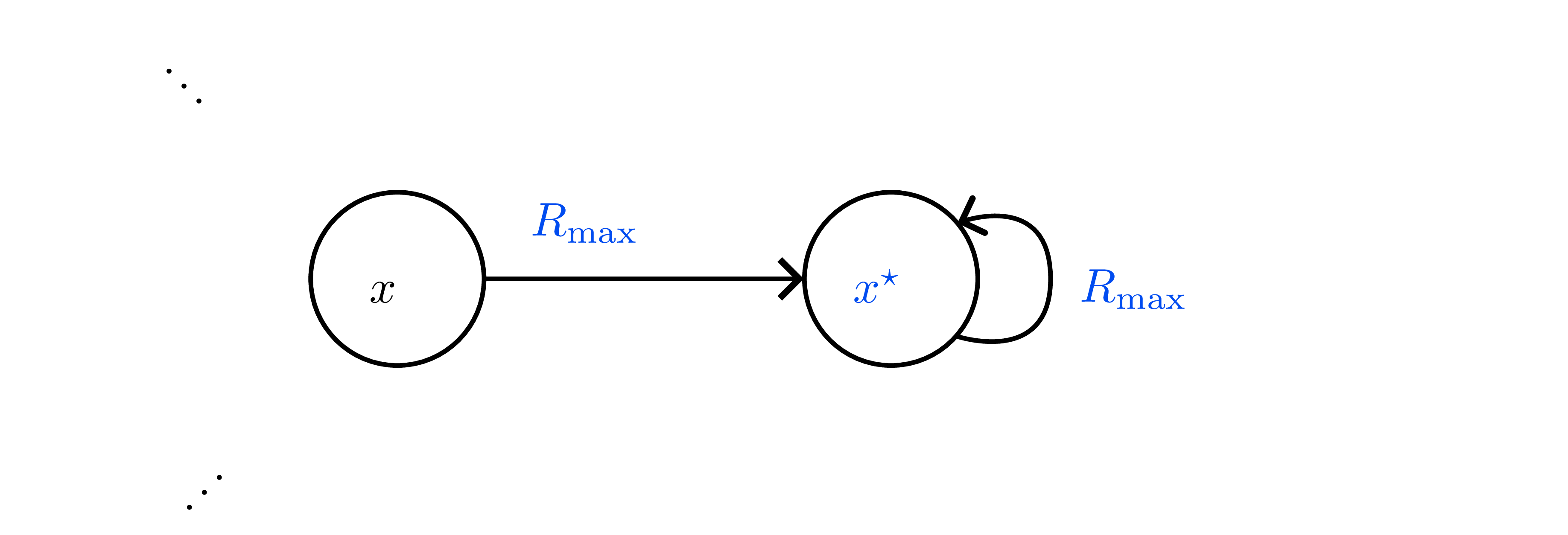

- Optimism (\(R_{\max}\)): initialize unknown \((x,a)\) as transitioning to fairy-tale state \(x_s\) with reward \(R_{\max}\) (see below). After \(N(x,a)\) exceeds Hoeffding threshold \(\frac{R_{\max}^2}{2\epsilon^2}\log\frac{2}{\delta}\), replace with empirical model. Guarantees \(\epsilon\)-optimal policy in poly(\(|\mathcal{X}|,|\mathcal{A}|,1/\epsilon,1/\delta\)) steps; chart highlights how bonus intervals shrink with data.

- Cost: storing dense model \(\mathcal{O}(|\mathcal{X}|^2|\mathcal{A}|)\) + repeatedly solving MDP (policy/value iteration).

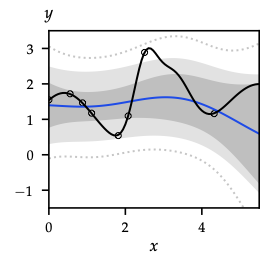

Model-free prediction

- Monte Carlo evaluation: first-visit / every-visit returns; unbiased but high variance and episode-length dependent.

- TD(0): \(V(x) \leftarrow V(x)+\alpha_t\delta_t\) with \(\delta_t=r+\gamma V(x')-V(x)\). Converges almost surely if each state visited infinitely often and \(\alpha_t\) satisfies RM. Generalizes to TD(\(\lambda\)) by using eligibility traces.

On-policy control

- SARSA: \(Q(x,a) \leftarrow Q(x,a)+\alpha_t\big(r + \gamma Q(x',a') - Q(x,a)\big)\) where \((x',a')\) drawn from behavior policy. With GLIE exploration and RM step sizes, \(Q\to q^{\pi^*}\) (Theorem 11.4).

- Incremental policy-iteration view: evaluate with SARSA, improve greedily (still need exploration noise to avoid collapse).

- Actor interpretation: SARSA TD-error \(\delta_t\) is the advantage signal later reused in actor-critic.

Off-policy evaluation and control

- Off-policy TD: \(Q(x,a) \leftarrow Q(x,a)+\alpha_t\big(r + \gamma \sum_{a'}\pi(a'\mid x')Q(x',a') - Q(x,a)\big)\) for arbitrary behavior. Enables reuse of logged data.

- Q-learning: \(Q(x,a) \leftarrow Q(x,a)+\alpha_t\big(r + \gamma \max_{a'}Q(x',a') - Q(x,a)\big)\).

- Requires all \((x,a)\) sampled infinitely often; \(\alpha_t\) with RM ⇒ almost sure convergence.

- Sample complexity (Even-Dar & Mansour 2003): poly in \(\log |\mathcal{X}|,\log |\mathcal{A}|,1/\epsilon,\log(1/\delta)\).

- Optimistic initialization or \(R_{\max}\)-style bonuses accelerate exploration (precursor to UCB-like deep RL bonuses).

- Coverage assumptions foreshadow importance-sampling corrections later.

Step-size schedules & GLIE condition

- Robbins–Monro: \(\alpha_t\ge 0\), \(\sum_t\alpha_t=\infty\), \(\sum_t\alpha_t^2<\infty\) (e.g. \(1/t\), \(1/(1+N_t(x,a))\)).

- GLIE (Definition 11.2): ensures infinite exploration + greedy limit, critical for convergence of Monte Carlo control, SARSA, Q-learning.

Exploration heuristics summary

- \(\varepsilon\)-greedy: uniform randomization baseline; decays to zero while keeping \(\sum \varepsilon_t = \infty\).

- Softmax / Boltzmann: temperature-driven action weighting.

- Optimism (R\(_{\max}\), optimistic Q-init): treat unknown as high-value until proven otherwise.

- These strategies foreshadow exploration bonuses, entropy regularization, and max-entropy RL.

When to choose which approach

- Model-based tabular (with optimistic bonuses) wins on sample efficiency for small MDPs; expensive in memory/compute.

- TD/SARSA: incremental, low memory; need on-policy data and exploration schedule.

- Q-learning: off-policy, reuses experience replay later (deep RL); sensitive to coverage, step sizes.

Remember: all proofs hinge on (i) every \((x,a)\) visited infinitely often, and (ii) step sizes satisfying RM. These assumptions collapse once we approximate or restrict data, motivating the heavier machinery in Chapters 12–13.