Neural Network Basics

Biological Motivation

The architecture of artificial neural networks is loosely inspired by biological neural systems.

- Signal Reception and Integration: In biological neurons, dendrites receive chemical signals from other neurons. These signals are integrated in the cell body (soma).

- Thresholding and Activation: If the integrated signal surpasses a certain threshold, the neuron “fires,” generating an action potential. This thresholding mechanism introduces non-linearity into the system, as the neuron only transmits a signal if the input excitation is sufficient.

- Signal Transmission: The action potential travels down the axon to the axon terminals.

- Synaptic Transmission: At the axon terminals, neurotransmitters are released into the synapse (the junction between two neurons), which are then received by the dendrites of a subsequent neuron, continuing the signal propagation.

Perceptron

The Perceptron, introduced by Frank Rosenblatt in 1958, is one of the earliest and simplest types of artificial neural networks, functioning as a linear classifier.

- Definition: For an input vector \(x\), the Perceptron computes an output \(y\) based on weights \(w\) and a bias \(b\). The output is typically determined by whether the weighted sum \(w^T x + b\) exceeds a threshold (often 0): \[ y = \begin{cases} 1 & \text{if } w^T x + b > 0 \\ 0 & \text{otherwise} \end{cases} \] This can be seen as a simple linear classifier.

- Training:

- The Perceptron is typically trained using a form of gradient descent, specifically the Perceptron learning rule, which is a type of stochastic gradient descent.

- Early Perceptron models used a step activation function, resulting in binary outputs (e.g., 0 or 1, or -1 and 1). The learning rule updates weights based on misclassifications.

- Capabilities and Limitations:

- If a dataset is linearly separable, the Perceptron convergence theorem guarantees that the Perceptron learning algorithm will find a separating hyperplane in a finite number of steps.

- However, the Perceptron does not necessarily find an optimal separating hyperplane (e.g., one that maximizes the margin, as Support Vector Machines do). It simply finds a solution.

- If the data is not linearly separable, the basic Perceptron algorithm may not converge.

- Modern Context: Modern neural networks build upon these foundational concepts by employing multiple layers of neurons (forming “deep” networks) and using smooth, differentiable activation functions instead of the Heaviside step function. This allows for the learning of more complex, non-linear decision boundaries and the use of more sophisticated gradient-based optimization algorithms like backpropagation.

Maximum Likelihood Estimation (MLE)

Maximum Likelihood Estimation (MLE) is a fundamental statistical method used to estimate the parameters \(\theta\) of a model. In the context of supervised learning, we aim to model the conditional probability \(p_{\text{model}}(y | X, \theta)\), where \(y\) represents the target variable(s) and \(X\) represents the input features.

Likelihood Function: Assuming independent and identically distributed (i.i.d.) data samples \((x_i, y_i)\), the likelihood of observing the entire dataset \(\mathcal{D} = \{(x_1, y_1), \dots, (x_N, y_N)\}\) is given by: \[ L(\theta | X, Y) = P(Y | X, \theta) = \prod_{i=1}^{N} p_{\text{model}}(y_i | x_i, \theta) \]

Log-Likelihood: It is often more convenient to work with the log-likelihood, as it converts products into sums and does not change the location of the maximum: \[ \mathcal{L}(\theta | X, Y) = \log L(\theta | X, Y) = \sum_{i=1}^{N} \log p_{\text{model}}(y_i | x_i, \theta) \] In machine learning, the negative log-likelihood (NLL) is commonly used as a loss function to be minimized: \[ \text{Loss}(\theta) = - \mathcal{L}(\theta | X, Y) = - \sum_{i=1}^{N} \log p_{\text{model}}(y_i | x_i, \theta) \]

Gradient for Optimization: To find the parameters \(\theta\) that maximize the likelihood (or minimize the NLL), we typically take the derivative of the log-likelihood with respect to \(\theta\), set it to zero, and solve. For complex models, iterative optimization methods like gradient descent are used:

\[ \nabla_{\theta} \mathcal{L}(\theta | X, Y) = \sum_{i=1}^{N} \nabla_{\theta} \log p_{\text{model}}(y_i | x_i, \theta) \]

- Gradient of Sigmoid Activation: For a neuron with a sigmoid activation function \(\sigma(z) = \frac{1}{1 + e^{-z}}\), where \(z = w^T x + b\), its derivative with respect to \(z\) is: \[ \frac{d\sigma(z)}{dz} = \sigma(z)(1 - \sigma(z)) \]

- Gradient of Softmax Activation: For a softmax output layer \(S(z)_j = \frac{e^{z_j}}{\sum_k e^{z_k}}\), the derivative of the \(j\)-th softmax output with respect to the \(k\)-th input \(z_k\) (before softmax) is: \[ \frac{\partial S_j}{\partial z_k} = S_j (\delta_{jk} - S_k) \] where \(\delta_{jk}\) is the Kronecker delta (1 if \(j=k\), 0 otherwise). When combined with a cross-entropy loss, this derivative simplifies nicely.

MLE and Common Loss Functions:

- Gaussian Likelihood and Mean Squared Error (MSE): If we assume that the target variable \(y\) given input \(x\) follows a Gaussian distribution with mean \(f(x, \theta)\) (the model’s prediction) and constant variance \(\sigma^2\): \[ p_{\text{model}}(y_i | x_i, \theta) = \mathcal{N}(y_i | f(x_i, \theta), \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(y_i - f(x_i, \theta))^2}{2\sigma^2}\right) \] The negative log-likelihood for one sample is: \[ -\log p_{\text{model}}(y_i | x_i, \theta) = \frac{1}{2}\log(2\pi\sigma^2) + \frac{(y_i - f(x_i, \theta))^2}{2\sigma^2} \] Minimizing the sum over all samples, and ignoring constants, is equivalent to minimizing the Mean Squared Error (MSE): \[ \text{MSE} = \frac{1}{N} \sum_{i=1}^{N} (y_i - f(x_i, \theta))^2 \]

- Laplace Likelihood and Mean Absolute Error (MAE): If we assume \(y\) follows a Laplace distribution with mean \(f(x, \theta)\) and scale parameter \(b\): \[ p_{\text{model}}(y_i | x_i, \theta) = \text{Laplace}(y_i | f(x_i, \theta), b) = \frac{1}{2b} \exp\left(-\frac{|y_i - f(x_i, \theta)|}{b}\right) \] The negative log-likelihood for one sample is: \[ -\log p_{\text{model}}(y_i | x_i, \theta) = \log(2b) + \frac{|y_i - f(x_i, \theta)|}{b} \] Minimizing the sum over all samples, and ignoring constants, is equivalent to minimizing the Mean Absolute Error (MAE): \[ \text{MAE} = \frac{1}{N} \sum_{i=1}^{N} |y_i - f(x_i, \theta)| \]

MLE and Regularization (via Maximum A Posteriori - MAP):

- If we introduce a Gaussian prior distribution over the model weights \(\theta \sim \mathcal{N}(0, \alpha^2 I)\) and perform Maximum A Posteriori (MAP) estimation, this is equivalent to minimizing the NLL plus an \(L_2\) regularization term (Ridge regression): \[ \hat{\theta}_{MAP} = \arg\max_{\theta} \left( \sum_{i=1}^{N} \log p_{\text{model}}(y_i | x_i, \theta) + \log p(\theta) \right) \] This leads to a loss function like: \(\text{NLL} + \lambda ||\theta||_2^2\).

- If we introduce a Laplace prior over the model weights \(\theta \sim \text{Laplace}(0, \beta)\) and perform MAP estimation, this is equivalent to minimizing the NLL plus an \(L_1\) regularization term (Lasso regression): This leads to a loss function like: \(\text{NLL} + \lambda ||\theta||_1\).

Properties of MLE:

- Consistency: MLE is consistent, meaning that as the number of samples \(N\) approaches infinity, the MLE \(\hat{\theta}_{MLE}\) converges in probability to the true parameter value \(\theta_0\).

- Asymptotic Efficiency: MLE is asymptotically efficient. It achieves the Cramér-Rao lower bound, meaning it has the lowest possible variance among all asymptotically unbiased estimators as \(N \to \infty\).

- Asymptotic Normality: Under certain regularity conditions, the distribution of \(\hat{\theta}_{MLE}\) approaches a normal distribution as \(N \to \infty\).

Backpropagation

Backpropagation is the cornerstone algorithm for training multi-layer neural networks. It efficiently computes the gradient of the loss function with respect to the network’s weights by applying the chain rule of calculus.

Chain Rule: For a composite function, e.g., \(L(y, \hat{y})\) where \(\hat{y} = f(z)\) and \(z = g(W, x)\), the gradient of \(L\) with respect to weights \(W\) in an earlier layer is computed by propagating gradients backward from the output layer. If \(L\) is the loss, \(y^{(L)}\) is the output of layer \(L\), \(y^{(l)}\) is the output of layer \(l\), and \(W^{(l)}\) are the weights of layer \(l\), then:

\[ \frac{\partial L}{\partial W^{(l)}} = \frac{\partial L}{\partial y^{(L)}} \frac{\partial y^{(L)}}{\partial y^{(L-1)}} \dots \frac{\partial y^{(l+1)}}{\partial y^{(l)}} \frac{\partial y^{(l)}}{\partial W^{(l)}} \]

More precisely, for a given layer \(l\), the error \(\delta^{(l)}\) is propagated backwards:

\[ \delta^{(l)} = ((W^{(l+1)})^T \delta^{(l+1)}) \odot \sigma'(z^{(l)}) \]

where \(\sigma'\) is the derivative of the activation function at layer \(l\), \(z^{(l)}\) is the pre-activation output of layer \(l\), and \(\odot\) denotes element-wise product. The gradient with respect to weights \(W^{(l)}\) is then:

\[ \frac{\partial L}{\partial W^{(l)}} = \delta^{(l)} (a^{(l-1)})^T \]

where \(a^{(l-1)}\) is the activation from the previous layer.

Universal Approximation Theorem (UAT): This theorem provides a theoretical justification for the expressive power of neural networks.

- Statement (Cybenko 1989, Hornik et al. 1989): Let \(\sigma : \mathbb{R} \to \mathbb{R}\) be a non-constant, bounded, and continuous activation function. Let \(I_m\) denote the \(m\)-dimensional unit hypercube \([0,1]^m\). The space of continuous real-valued functions on \(I_m\) is denoted by \(C(I_m)\). Then, for any function \(f \in C(I_m)\) and any \(\epsilon > 0\), there exists an integer \(N\) (the number of neurons in the hidden layer), real constants \(v_i, b_i \in \mathbb{R}\), and real vectors \(w_i \in \mathbb{R}^m\) for \(i = 1, \dots, N\), such that if we define: \[ g(x) = \sum_{i=1}^{N} v_i \sigma(w_i^T x + b_i) \] then \(|g(x) - f(x)| < \epsilon\) for all \(x \in I_m\). (More formally, \(\sup_{x \in I_m} |g(x) - f(x)| < \epsilon\).)

- Implications: In essence, the theorem states that a feed-forward neural network with a single hidden layer containing a finite number of neurons and a suitable non-linear continuous activation function can approximate any continuous function defined on a compact subset of \(\mathbb{R}^m\) to an arbitrary degree of precision.

- Caveats:

- The theorem is an existence proof; it does not specify how to find the weights \(w_i, v_i\) or biases \(b_i\), nor does it guarantee that they can be learned by a particular algorithm like gradient descent.

- The number of neurons \(N\) required might be very large.

- It applies to continuous activation functions. For non-continuous ones like ReLU (Rectified Linear Unit), similar theorems exist.

Training Neural Networks

Regularization

Regularization encompasses a set of strategies designed to reduce a model’s test error (generalization error), sometimes at the cost of a slight increase in training error. The primary goal is to prevent overfitting, thereby improving the model’s performance on unseen data. Informally, these strategies often “make training harder” by constraining the model or introducing noise, compelling it to learn more robust and generalizable features.

- Definition: Techniques aimed at minimizing the generalization gap (the difference between test error and training error).

Parameter Norm Penalties

These methods aim to limit the model’s effective capacity by adding a penalty term to the loss function based on the magnitude of the model parameters (weights).

- L2 Regularization (Ridge Regression / Weight Decay):

- Adds a penalty proportional to the sum of the squares of the weights: \(L_{reg} = \lambda ||w||_2^2 = \lambda \sum_i w_i^2\).

- This encourages smaller, more diffuse weight values.

- The gradient of this penalty term with respect to a weight \(w_j\) is \(2 \lambda w_j\). In the weight update rule (\(w \leftarrow w - \eta (\nabla L_{data} + \nabla L_{reg})\)), this results in \(w_j \leftarrow w_j - \eta ( \dots + 2 \lambda w_j ) = w_j (1 - 2 \eta \lambda) - \dots\), effectively shrinking the weights by a constant factor in each step (hence “weight decay”).

- L1 Regularization (Lasso):

- Adds a penalty proportional to the sum of the absolute values of the weights: \(L_{reg} = \lambda ||w||_1 = \lambda \sum_i |w_i|\).

- This is known for inducing sparsity, meaning it tends to drive many weights to exactly zero, effectively performing feature selection.

- The gradient of this penalty term with respect to \(w_j\) is \(\lambda \text{sign}(w_j)\) (for \(w_j \neq 0\)). The update rule subtracts a constant value (\(\eta \lambda\)) from positive weights and adds it to negative weights, pushing them towards zero, irrespective of their current magnitude (as long as they are non-zero).

- Interpretations:

- Bayesian Prior: Parameter norm penalties can be interpreted as imposing a prior distribution on the weights in a Maximum A Posteriori (MAP) estimation framework. L2 regularization corresponds to a Gaussian prior, while L1 regularization corresponds to a Laplace prior.

- Constrained Optimization: They are also equivalent to solving a constrained optimization problem where the weights are constrained to lie within an \(L_2\) ball (for L2) or an \(L_1\) ball (for L1).

Ensemble Methods

Ensemble methods combine predictions from multiple models to achieve better performance and robustness than any single constituent model.

- General Idea: Train several different models and then average their predictions (for regression) or take a majority vote (for classification).

- Sources of Diversity:

- Train different model architectures on the same data.

- Train the same model architecture on different subsets of the data or with different initializations.

- Bagging (Bootstrap Aggregating):

- Trains multiple instances of the same base model on different bootstrap samples (random subsets of the training data, sampled with replacement).

- Primarily reduces variance and helps prevent overfitting. Random Forests are a prominent example.

- Boosting:

- Trains multiple base models sequentially. Each new model focuses on correcting the errors made by the previous models (e.g., by up-weighting misclassified examples).

- Primarily reduces bias. Examples include AdaBoost, Gradient Boosting Machines (GBMs), and XGBoost.

Dropout

Dropout is a regularization technique specifically for neural networks that prevents complex co-adaptations of neurons.

- Mechanism: During training, for each forward pass, a fraction of neuron outputs in a layer are randomly set to zero (dropped out). The dropout probability (e.g., \(p=0.5\)) is a hyperparameter.

- Ensemble Interpretation: Dropout can be viewed as implicitly training a large ensemble of thinned networks (networks with different subsets of neurons).

- Inference Time:

- Weight Scaling: The most common approach is to use the full network at test time, but scale down the weights of the dropout layers by the dropout probability \(p\) (or, more commonly, scale up activations by \(1/(1-p)\) during training – known as inverted dropout – so no scaling is needed at test time). This approximates averaging the predictions of the exponentially many thinned networks.

- Monte Carlo (MC) Dropout: Alternatively, one can perform multiple forward passes with dropout still active at test time and average the predictions. This provides an estimate of model uncertainty.

Data Normalization

Normalizing input data can stabilize and accelerate training.

- Motivation: Large input values or features with vastly different scales can lead to correspondingly large weight updates and an ill-conditioned loss landscape, potentially causing training instability or slow convergence.

- Common Practice: Standardize features to have zero mean and unit variance: \(x'_{ij} = (x_{ij} - \mu_j) / \sigma_j\), where \(\mu_j\) and \(\sigma_j\) are the mean and standard deviation of feature \(j\) over the training set.

- Test Time: The same mean and standard deviation values computed from the training data must be used to normalize test data to prevent data leakage and ensure consistency.

Batch Normalization (BN)

Batch Normalization normalizes the activations within the network, layer by layer, to address the problem of internal covariate shift.

- Mechanism:

- For each mini-batch during training, compute the mean \(\mu_{\mathcal{B}}\) and variance \(\sigma_{\mathcal{B}}^2\) of the activations for each feature/channel, along the batch dimension.

- Normalize the activations: \(\hat{x}_i = (x_i - \mu_{\mathcal{B}}) / \sqrt{\sigma_{\mathcal{B}}^2 + \epsilon}\).

- Scale and shift the normalized activations using learned parameters \(\gamma\) (scale) and \(\beta\) (shift): \(y_i = \gamma \hat{x}_i + \beta\). These parameters allow the network to learn the optimal distribution for activations, potentially undoing the normalization if necessary.

- Inference Time: During inference, the mini-batch statistics are unavailable. Instead, population statistics (running means and variances) estimated during training (e.g., via exponential moving averages of mini-batch means/variances) are used for normalization.

- Benefits:

- Reduces internal covariate shift (changes in the distribution of layer inputs during training), stabilizing learning.

- Allows for higher learning rates and can make training less sensitive to weight initialization.

- The bias terms in layers preceding BN become redundant (as \(\beta\) can fulfill their role) and can often be omitted.

- The stochasticity introduced by using mini-batch statistics for normalization has a slight regularization effect.

Data Augmentation

Data augmentation artificially expands the training dataset by creating modified copies of existing data or synthesizing new data.

- Principle: More diverse training data generally leads to better generalization and robustness.

- Technique: Apply transformations to input data while preserving their labels.

- Examples for Images:

- Geometric Transformations: Rotation, scaling, translation, shearing, flipping.

- Color Jittering: Adjusting brightness, contrast, saturation, hue.

- Noise Injection: Adding random noise (e.g., Gaussian noise) to inputs can enforce robustness against small perturbations.

Transfer Learning

Transfer learning leverages knowledge gained from solving one problem to improve performance on a different but related problem.

- Process:

- Pre-training: A model is trained on a large-scale dataset (e.g., ImageNet for computer vision tasks). This model learns general-purpose features.

- Fine-tuning: The pre-trained model (or parts of it) is then adapted to a smaller, target dataset. This typically involves replacing the final classification layer and re-training some or all of the network’s layers with a smaller learning rate.

- Using Pre-trained Models: One can directly use well-known architectures (e.g., ResNet, VGG, BERT) that have been pre-trained on extensive datasets, saving significant training time and resources.

Activation Functions

Activation functions introduce non-linearities into the network, enabling it to learn complex patterns.

- Sigmoid: \(f(x) = 1 / (1 + e^{-x})\)

- Output range: \((0, 1)\).

- Saturates for large positive or negative inputs, leading to vanishing gradients (gradients close to zero), which can slow down or stall learning in deep networks.

- Not zero-centered, which can be a minor issue for gradient updates.

- Tanh (Hyperbolic Tangent): \(f(x) = (e^x - e^{-x}) / (e^x + e^{-x})\)

- Output range: \((-1, 1)\).

- Zero-centered, which is generally preferred over Sigmoid.

- Also saturates and suffers from vanishing gradients, though its gradients are typically larger than Sigmoid’s around zero.

- ReLU (Rectified Linear Unit): \(f(x) = \max(0, x)\)

- Non-saturating in the positive domain, which helps alleviate the vanishing gradient problem and accelerates convergence.

- Computationally efficient.

- Can lead to “dead neurons”: if a neuron’s input is consistently negative, it will always output zero, and its gradient will also be zero, preventing weight updates for that neuron.

- Unbounded in the positive direction.

- Leaky ReLU (LReLU) / Parametric ReLU (PReLU): \(f(x) = \max(\alpha x, x)\)

- Addresses the dead neuron problem by allowing a small, non-zero gradient when the unit is not active (e.g., \(\alpha = 0.01\) for LReLU).

- In PReLU, \(\alpha\) is a learnable parameter.

- Randomized Leaky ReLU (RReLU) samples \(\alpha\) from a distribution during training.

- GELU (Gaussian Error Linear Unit): \(f(x) = x \Phi(x)\), where \(\Phi(x)\) is the cumulative distribution function (CDF) of the standard Gaussian distribution.

- A smooth approximation of ReLU, often performing well in Transformer models.

- Approximation: \(f(x) \approx 0.5x (1 + \tanh[\sqrt{2/\pi}(x + 0.044715x^3)])\).

- Non-saturating in the positive domain.

- Initialization: Proper weight initialization techniques (e.g., He initialization for ReLU-like activations) can also help mitigate issues like dead neurons and improve training stability.

Optimization Algorithms

Optimization algorithms adjust the model’s parameters (weights and biases) to minimize the loss function.

- Gradient Descent Variants:

- Batch Gradient Descent (Full GD): Computes the gradient using the entire training dataset. Accurate but computationally expensive for large datasets and can get stuck in local minima.

- Stochastic Gradient Descent (SGD): Computes the gradient using a single training example at a time. Faster updates, noisy gradients can help escape local minima, but high variance in updates.

- Mini-Batch Gradient Descent: Computes the gradient using a small batch of training examples. Strikes a balance between the accuracy of full GD and the efficiency/stochasticity of SGD. This is the most common approach.

Momentum-Based Methods

These methods accelerate SGD by incorporating information from past updates.

- Polyak’s Momentum (Heavy Ball Method):

- Addresses oscillations in SGD, particularly in ravines (areas where the surface curves more steeply in one dimension than another).

- Maintains an exponentially moving average (EMA) of past gradients, which acts as a “velocity” term.

- Update equations: Let \(g_t = \nabla_{\theta} J(\theta_t)\) be the gradient at time \(t\). Velocity (EMA of gradients): \(v_t = \beta v_{t-1} + (1 - \beta) g_t\) Parameter update: \(\theta_{t+1} = \theta_t - \eta v_t\) where \(\beta\) is the momentum coefficient (e.g., 0.9) and \(\eta\) is the learning rate.

- Convergence: While standard SGD with momentum is widely used and effective for non-convex optimization, its convergence properties can be complex. Polyak’s original heavy ball method has convergence guarantees for strongly convex functions.

- Nesterov Accelerated Gradient (NAG):

- A modification of momentum that provides a “lookahead” capability. It calculates the gradient not at the current position \(\theta_t\), but at an approximated future position \(\theta_t - \eta \beta v_{t-1}\) (where the parameters would be after applying the previous momentum). This allows for a more informed gradient step.

- Update equations (one common formulation): Lookahead point: \(\theta_{lookahead} = \theta_t - \eta_{lr} \beta v_{t-1}\) Gradient at lookahead: \(g_{lookahead} = \nabla J(\theta_{lookahead})\) Velocity EMA: \(v_t = \beta v_{t-1} + (1-\beta) g_{lookahead}\) Parameter update: \(\theta_{t+1} = \theta_t - \eta_{lr} v_t\)

- Alternatively, a common implementation form (e.g., Hinton et al.): \(v_{t+1} = \mu v_t - \epsilon \nabla J(\theta_t + \mu v_t)\) \(\theta_{t+1} = \theta_t + v_{t+1}\) where \(\mu\) is momentum and \(\epsilon\) is learning rate.

- The “lookahead” often provides faster convergence and better performance than standard momentum. The computation of gradients at different points can be re-written through a change of variables for implementation.

Adaptive Learning Rate Methods

These methods adjust the learning rate for each parameter individually based on the history of gradients.

Adagrad (Adaptive Gradient Algorithm):

- Adapts the learning rate for each parameter, performing larger updates for infrequent parameters and smaller updates for frequent parameters.

- Accumulates the sum of squared past gradients for each parameter.

- Update for parameter \(\theta_i\): \[ G_{t,ii} = G_{t-1,ii} + g_{t,i}^2 \] \[ \theta_{t+1, i} = \theta_{t, i} - \frac{\eta}{\sqrt{G_{t,ii} + \epsilon}} g_{t,i} \] where \(g_{t,i}\) is the gradient of the loss with respect to \(\theta_i\) at time \(t\), \(G_t\) is a diagonal matrix where each diagonal element \(G_{t,ii}\) is the sum of squares of past gradients for \(\theta_i\), \(\eta\) is a global learning rate, and \(\epsilon\) is a small constant for numerical stability.

- Strength: Good for sparse data.

- Weakness: The learning rate monotonically decreases and can become too small prematurely, effectively stopping learning.

RMSProp (Root Mean Square Propagation):

- Addresses Adagrad’s aggressively diminishing learning rates by using an exponentially decaying moving average of squared gradients instead of summing all past squared gradients.

- Update for parameter \(\theta_i\): \[ E[g^2]_{t,i} = \gamma E[g^2]_{t-1,i} + (1-\gamma) g_{t,i}^2 \] \[ \theta_{t+1, i} = \theta_{t, i} - \frac{\eta}{\sqrt{E[g^2]_{t,i} + \epsilon}} g_{t,i} \] where \(E[g^2]_{t,i}\) is the moving average of squared gradients for \(\theta_i\), and \(\gamma\) is the decay rate (e.g., 0.9).

Adam (Adaptive Moment Estimation):

- Combines the ideas of momentum (using an EMA of past gradients, first moment) and adaptive learning rates (using an EMA of past squared gradients, second moment, similar to RMSProp).

- Includes bias correction terms for the first and second moment estimates, which are particularly important during the initial stages of training when the EMAs are initialized at zero.

- Update equations: \(m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t\) (EMA of gradients - 1st moment) \(v_t = \beta_2 v_{t-1} + (1-\beta_2) g_t^2\) (EMA of squared gradients - 2nd moment) Bias-corrected estimates: \[ \hat{m}_t = \frac{m_t}{1 - \beta_1^t} \] \[ \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \] Parameter update: \[ \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon} \hat{m}_t \] where \(\beta_1\) (e.g., 0.9) and \(\beta_2\) (e.g., 0.999) are decay rates for the moment estimates. Adam is often a good default choice for many problems.

Learning Rate Schedules

A learning rate schedule dynamically adjusts the learning rate during training.

- Step Decay: Reduces the learning rate by a fixed factor (e.g., 0.1) every few epochs.

- Exponential Decay: Reduces the learning rate exponentially: \(\eta_t = \eta_0 \exp(-kt)\), where \(k\) is a decay rate.

- Time-Based Decay: Reduces the learning rate as a function of iteration number, e.g., \(\eta_t = \eta_0 / (1 + kt)\).

- Cosine Annealing: Varies the learning rate according to a cosine function, typically from an initial high value \(\eta_{max}\) down to a minimum value \(\eta_{min}\) (often 0) over a specified number of epochs (a “cycle”). \[ \eta_t = \eta_{min} + \frac{1}{2}(\eta_{max} - \eta_{min})\left(1 + \cos\left(\frac{T_{cur}}{T_{cycle}}\pi\right)\right) \] where \(T_{cur}\) is the current epoch/iteration within the cycle and \(T_{cycle}\) is the total duration of the cycle. This schedule smoothly decreases the learning rate. It can be used with “restarts” (SGDR - Stochastic Gradient Descent with Warm Restarts), where the cosine cycle is repeated multiple times.

Advanced Ensemble and Weight Averaging Techniques

Beyond basic bagging and boosting, several techniques leverage model weights or training dynamics for ensembling.

- Snapshot Ensembling: Train a single model, but save “snapshots” (checkpoints) of its weights at different points during training (often at local minima found by a cyclical learning rate schedule). These snapshots are then ensembled.

- Polyak-Ruppert Averaging (or EMA of weights): Maintain an exponential moving average of the model’s weights during the later stages of training. This averaged model often generalizes better.

Fast Geometric Ensembling (FGE)

FGE leverages the observation that local minima in the loss landscape of deep neural networks are often connected by simple, low-loss paths.

- Concept: Train a single network using a cyclical learning rate schedule (e.g., multiple cycles of cosine annealing).

- Procedure:

- Define a learning rate schedule with multiple cycles (e.g., \(M\) cycles over \(T\) total epochs). Each cycle involves a period of higher learning rate followed by a decrease to a very low learning rate.

- At the end of each cycle, when the learning rate is at its minimum (allowing the model to converge to a local optimum), save a snapshot of the model weights.

- The ensemble consists of these \(M\) saved models. Their predictions are averaged at inference time.

- Benefit: Allows finding multiple diverse, high-performing models with roughly the same computational cost as training a single model.

Stochastic Weight Averaging (SWA)

SWA is a simpler approximation that often yields similar benefits to FGE, leading to wider optima and better generalization.

- Procedure:

- Initial Training: Train a model using a standard learning rate schedule for a certain number of epochs (e.g., 75% of the total training budget).

- SWA Phase: For the remaining epochs (e.g., 25%), switch to a modified learning rate schedule:

- Typically a constant high learning rate or a cyclical schedule with a relatively high average (e.g., short cosine annealing cycles).

- Weight Collection: During this SWA phase, collect model weights at regular intervals (e.g., at the end of each epoch or every few iterations).

- Averaging: Compute the average of these collected weights: \(w_{SWA} = \frac{1}{N_{models}} \sum_{i=1}^{N_{models}} w_i\).

- Batch Normalization Update: After obtaining \(w_{SWA}\), perform a forward pass over the entire training data using the \(w_{SWA}\) model to recompute the Batch Normalization running statistics. This is crucial for good performance.

- Inference: Use the \(w_{SWA}\) model for predictions.

- Advantages: Easy to implement and often provides a significant performance boost with minimal additional computational cost beyond the initial training.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a class of deep neural networks particularly well-suited for processing grid-like data, such as images.

- Wide Range of Applications:

- Image classification, object localization, instance segmentation, semantic segmentation, 3D pose estimation, eye gaze estimation, dynamic gesture recognition, and more.

- Biological Inspiration:

- CNNs are inspired by the organization of the animal visual cortex.

- Hubel and Wiesel (1950s-1960s) discovered a hierarchy in the visual cortex:

- Simple cells: Respond to specific features (e.g., oriented edges) at particular locations; susceptible to noise.

- Complex cells: Aggregate responses from simple cells, providing spatial invariance and robustness.

- Neocognitron (Fukushima, 1980):

- Early hierarchical neural network model for visual pattern recognition.

- S-cells: Simple cells, analogous to convolutional filters.

- C-cells: Complex cells, analogous to pooling layers.

The Convolution Operation in CNNs

- Properties:

- Linear transformation.

- Shift equivariance: Shifting the input shifts the output in the same way.

- Mathematical Definition (Convolution):

- Given input image \(I\) and kernel \(K\), the output \(I'\) is: \[ I'(i, j) = \sum_{m} \sum_{n} K(m, n) \, I(i - m, j - n) \]

- Cross-Correlation (as used in most deep learning libraries): \[

I'(i, j) = \sum_{m} \sum_{n} K(m, n) \, I(i + m, j + n)

\]

- True convolution is cross-correlation with a 180-degree rotated kernel: \(I * K = I \star K_{\text{flipped}}\), where \(K_{\text{flipped}}(m, n) = K(-m, -n)\).

- Commutativity: Convolution is commutative (\(I * K = K * I\)), cross-correlation is not.

CNN Architecture Overview

A typical CNN consists of:

- Convolutional layers: Learn spatial features.

- Activation functions: Non-linearities (e.g., ReLU, sigmoid, tanh).

- Pooling layers: Downsample feature maps.

- Fully connected layers: Used at the end for classification/regression.

Mathematical Derivation of a Convolutional Layer

Let \(z^{[l-1]}_{u, v}\) be the output of layer \(l-1\) at position \((u, v)\). Let \(w^{[l]}_{m, n}\) be the filter weights, and \(b^{[l]}\) the bias.

Forward Pass:

\[ z^{[l]}_{i, j} = \sum_{m} \sum_{n} w^{[l]}_{m, n} \, z^{[l-1]}_{i - m, j - n} + b^{[l]} \]

Backward Pass:

Let \(L\) be the loss, and \(\delta^{[l]}_{i, j} = \frac{\partial L}{\partial z^{[l]}_{i, j}}\).

Gradient w.r.t. previous layer activations:

\[ \delta^{[l-1]}_{i, j} = \sum_{m} \sum_{n} \delta^{[l]}_{i + m, j + n} \, w^{[l]}_{m, n} \]

- This is equivalent to convolving \(\delta^{[l]}\) with the kernel \(w^{[l]}\) rotated by 180 degrees: \[ \delta^{[l-1]} = \delta^{[l]} * \text{rot180}(w^{[l]}) \]

Gradient w.r.t. weights:

\[ \frac{\partial L}{\partial w^{[l]}_{m, n}} = \sum_{i} \sum_{j} \delta^{[l]}_{i, j} \, z^{[l-1]}_{i - m, j - n} \]

- This is the cross-correlation of \(z^{[l-1]}\) with \(\delta^{[l]}\).

Gradient w.r.t. bias: \[ \frac{\partial L}{\partial b^{[l]}} = \sum_{i} \sum_{j} \delta^{[l]}_{i, j} \]

Pooling Layers

- Purpose: Downsample feature maps, reduce computation, and provide translation invariance.

- Max pooling: Takes the maximum value in a local region.

- No parameters.

- During backpropagation, the gradient is passed only to the input that had the maximum value.

- Average pooling: Takes the average value in a local region.

- Modern trend: Strided convolutions often replace pooling.

Convolution and Cross-Correlation Identities

- \(A * \text{rot180}(B) = A \star B\)

- \(A \star B = \text{rot180}(B) * A\)

Fully Convolutional Networks (FCNs)

- Semantic segmentation: Classify each pixel.

- Input and output dimensions: Typically the same.

- Architecture: Downsample (encoder), then upsample (decoder).

- Upsampling methods:

- Fixed: Nearest neighbor, “bed of nails”, max unpooling.

- Learnable: Transposed convolution.

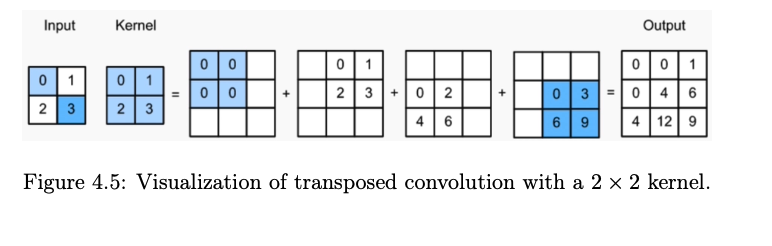

Transposed Convolution

- Also called: Fractionally strided convolution, “deconvolution” (not a true inverse).

- Mechanism:

- Upsample by inserting zeros between input elements.

- Apply a standard convolution to the upsampled input.

- Output size for input height \(H_{in}\), kernel size \(k\), stride \(s\), and padding \(p\): \[ H_{out} = s (H_{in} - 1) + k - 2p \]

- Visualization: Each input value is multiplied by the kernel and “spread” over the output, overlapping values are summed.

U-Net Architecture

- Symmetric encoder-decoder: Contracting path (encoder) for context, expanding path (decoder) for localization.

- Skip connections: Concatenate encoder features with decoder features at corresponding resolutions for better localization.

- Applications: Semantic segmentation, image generation from segmentation maps, 3D human pose/shape estimation, and more.

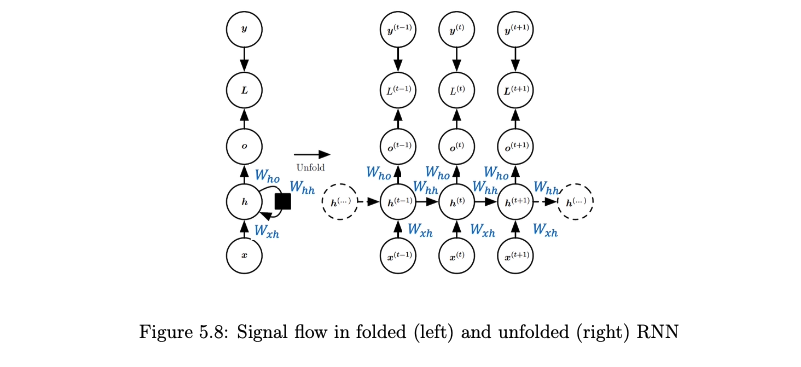

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed specifically for processing sequential data. Unlike feedforward neural networks, RNNs possess internal memory (state) that allows them to capture temporal dependencies and patterns over time.

Key Characteristics:

- Sequential Data Processing: Suited for tasks where the order of input elements matters, such as time series, natural language, speech, and video.

- Variable-Length Sequences: Can naturally handle input and output sequences of varying lengths.

- Internal State (Memory): Maintain a hidden state \(h^t\) that is updated at each time step, summarizing information from past inputs in the sequence. This state is recurrently fed back into the network.

- Weight Sharing: The same set of weights is used across all time steps for processing different elements of the sequence. This allows the model to generalize to sequences of different lengths and learn patterns that are consistent across time.

Common RNN Architectures based on Input/Output Mapping:

- One-to-One: Standard feedforward neural network (not typically considered an RNN in this context, but serves as a baseline).

- Example: Image classification (single input, single output).

- One-to-Many: A single input generates a sequence of outputs.

- Example: Image captioning (input: image; output: sequence of words).

- Example: Music generation from a seed note.

- Many-to-One: A sequence of inputs generates a single output.

- Example: Sentiment analysis (input: sequence of words; output: sentiment label).

- Example: Sequence classification (e.g., classifying a video based on its frames).

- Many-to-Many (Synchronous): A sequence of inputs generates a corresponding sequence of outputs, with alignment between input and output at each step.

- Example: Video frame classification (classifying each frame in a video).

- Example: Part-of-speech tagging (tagging each word in a sentence).

- Many-to-Many (Asynchronous/Delayed): A sequence of inputs generates a sequence of outputs, but the lengths may differ, and alignment is not necessarily one-to-one at each step.

- Example: Machine translation (input: sentence in one language; output: sentence in another language).

- Example: Speech recognition (input: audio sequence; output: sequence of words).

- One-to-One: Standard feedforward neural network (not typically considered an RNN in this context, but serves as a baseline).

RNNs as Dynamical Systems

RNNs can be viewed as discrete-time dynamical systems.

- Dynamical System Definition: A mathematical model describing the temporal evolution of a system’s state. The state at a given time is a function of its previous state and any external inputs.

- Continuous-time dynamical systems are often described by differential equations (e.g., \(\frac{dh(t)}{dt} = f(h(t), x(t))\)).

- RNNs are discrete-time dynamical systems, where the state \(h^t\) evolves at discrete time steps \(t\). The evolution is defined by a recurrence relation: \[ h^t = f(h^{t-1}, x^t; \theta) \] where \(h^t\) is the hidden state at time \(t\), \(h^{t-1}\) is the hidden state at the previous time step, \(x^t\) is the input at time \(t\), and \(\theta\) represents the model parameters (weights and biases).

- Relation to Hidden Markov Models (HMMs): While both RNNs and HMMs model sequences and have hidden states, they are different concepts. HMMs are probabilistic graphical models with discrete states and explicit transition/emission probabilities. RNNs are connectionist models with continuous hidden states and learn transitions implicitly through neural network functions. HMMs are a specific type of dynamical system, but not all discrete dynamical systems are HMMs.

Vanilla RNN

The “vanilla” RNN is the simplest form of a recurrent network.

State Update Equation: The hidden state \(h^t\) is computed as a non-linear function of the previous hidden state \(h^{t-1}\) and the current input \(x^t\):

\[ h^t = \tanh(W_{hh} h^{t-1} + W_{xh} x^t + b_h) \]

where:

- \(x^t\) is the input vector at time step \(t\).

- \(h^{t-1}\) is the hidden state vector from the previous time step \(t-1\).

- \(W_{xh}\) is the weight matrix connecting the input to the hidden layer.

- \(W_{hh}\) is the weight matrix connecting the previous hidden state to the current hidden state (the recurrent weights).

- \(b_h\) is the bias vector for the hidden layer.

- \(\tanh\) is the hyperbolic tangent activation function.

Output Equation: The output \(\hat{y}^t\) at time step \(t\) is typically computed from the current hidden state:

\[ \hat{y}^t = W_{hy} h^t + b_y \]

where:

- \(W_{hy}\) is the weight matrix connecting the hidden layer to the output layer.

- \(b_y\) is the bias vector for the output layer.

Weight Sharing: The weight matrices (\(W_{xh}, W_{hh}, W_{hy}\)) and biases (\(b_h, b_y\)) are shared across all time steps.

- Training: Backpropagation Through Time (BPTT)

- BPTT unrolls the RNN through time for a sequence of length \(S\) and applies backpropagation.

- The total loss \(L\) is the sum of losses \(L^t\) at each time step (e.g., \(L^t = \|\hat{y}^t - y^t\|^2\)).

- The gradient of the total loss \(L\) with respect to any weight matrix \(W\) is: \[ \frac{\partial L}{\partial W} = \sum_{t=1}^{S} \frac{\partial L^t}{\partial W} \]

- The gradient of the loss at a specific time step \(t\), \(L^t\), with respect to \(W\) is found by summing influences through all intermediate hidden states \(h^k\): \[ \frac{\partial L^t}{\partial W} = \sum_{k=1}^{t} \frac{\partial L^t}{\partial \hat{y}^t} \frac{\partial \hat{y}^t}{\partial h^t} \frac{\partial h^t}{\partial h^k} \frac{\partial^+ h^k}{\partial W} \] where \(\frac{\partial^+ h^k}{\partial W}\) is the “immediate” partial derivative.

- Vanishing/Exploding Gradients: The term \(\frac{\partial h^t}{\partial h^k}\) is critical: \[

\frac{\partial h^t}{\partial h^k} = \prod_{i=k+1}^{t} \frac{\partial h^i}{\partial h^{i-1}}

\] Given the state update \(h^i = \tanh(W_{hh} h^{i-1} + W_{xh} x^i + b_h)\), the Jacobian is: \[

\frac{\partial h^i}{\partial h^{i-1}} = W_{hh} \operatorname{diag}(1 - (h^{i-1})^2)

\] where \(\operatorname{diag}(1 - (h^{i-1})^2)\) is a diagonal matrix with entries \(1 - (h^{i-1}_j)^2\). Thus, the product becomes: \[

\frac{\partial h^t}{\partial h^k} = \prod_{i=k+1}^{t} \left( W_{hh} \operatorname{diag}(1 - (h^{i-1})^2) \right)

\] This product involves repeated multiplication of \(W_{hh}\) (and diagonal matrices).

- If the magnitudes of eigenvalues/singular values of \(W_{hh}\) are consistently greater than 1, the norm of this product can grow exponentially with \(t-k\), leading to exploding gradients.

- If they are consistently less than 1, the norm can shrink exponentially, leading to vanishing gradients.

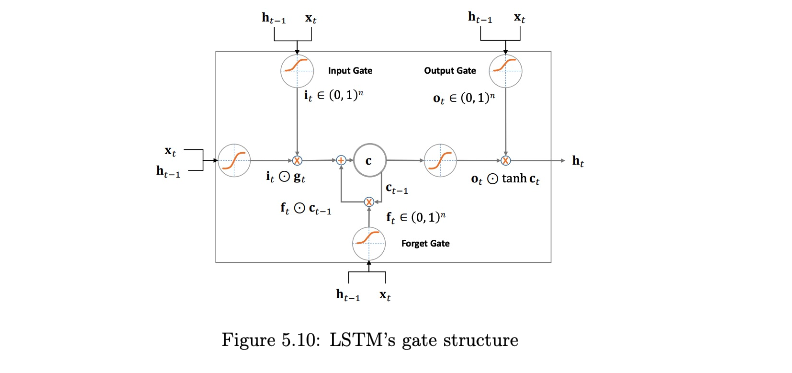

Long Short-Term Memory (LSTM)

LSTMs were introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 to address the vanishing gradient problem in vanilla RNNs. They achieve this through a more complex internal cell structure involving gating mechanisms.

- Core Idea: LSTMs use gates (input, forget, output) to control the flow of information into and out of a cell state \(c_t\). The cell state acts as a memory conveyor belt, allowing information to propagate over long durations with minimal decay.

Matrix Representation for Gates and Cell Update: Let \(x_t\) be the current input and \(h_{t-1}\) be the previous hidden state. The core computations for the gates (forget \(f_t\), input \(i_t\), output \(o_t\)) and the candidate cell state \(\tilde{c}_t\) can be combined:

\[ \begin{pmatrix} i_t \\ f_t \\ o_t \\ \tilde{c}_t \end{pmatrix} = \begin{pmatrix} \sigma \\ \sigma \\ \sigma \\ \tanh \end{pmatrix} \left( W \begin{bmatrix} x_t \\ h_{t-1} \end{bmatrix} + b \right) \]

where \(W\) is a block weight matrix and \(b\) is a block bias vector. The cell state \(c_t\) and hidden state \(h_t\) are then updated:

\[ c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \]

\[ h_t = o_t \odot \tanh(c_t) \]

where \(\sigma\) is the sigmoid function, \(\tanh\) is the hyperbolic tangent function, and \(\odot\) denotes element-wise multiplication.

Stacked LSTMs (Multiple Layers): LSTMs can be stacked to create deeper recurrent networks. The hidden state \(h_t^{[l]}\) of layer \(l\) at time \(t\) becomes the input \(x_t^{[l+1]}\) for layer \(l+1\) at the same time step \(t\).

Gated Recurrent Unit (GRU)

The Gated Recurrent Unit (GRU), introduced by Cho et al. in 2014, is a variation of the LSTM with a simpler architecture and fewer parameters. It often performs comparably to LSTMs on many tasks.

- Key Differences from LSTM:

- It combines the forget and input gates of an LSTM into a single update gate.

- It merges the cell state and hidden state, resulting in only one state vector being passed between time steps.

- It uses a reset gate to control how much of the previous state information is incorporated into the candidate state.

- GRUs are generally computationally slightly more efficient than LSTMs due to having fewer gates and parameters. The choice between LSTM and GRU often depends on the specific dataset and task, with empirical evaluation being the best guide.

Gradient Clipping

Gradient clipping is a common technique used during the training of RNNs (and other deep networks) to mitigate the exploding gradient problem.

- Mechanism:

- If the L2 norm of the gradient vector \(g = \frac{\partial L}{\partial W}\) (where \(W\) represents all parameters, or parameters of a specific layer) exceeds a predefined threshold \(\tau\), the gradient is scaled down to have a norm equal to \(\tau\).

- If \(\|g\|_2 > \tau\), then \(g \leftarrow \frac{\tau}{\|g\|_2} g\).

- If \(\|g\|_2 \le \tau\), then \(g\) remains unchanged.

- Purpose: It prevents excessively large gradient updates that can destabilize the training process, without changing the direction of the gradient. It does not directly solve the vanishing gradient problem.

Generative Models

- The primary objective of generative modeling is to learn the underlying probability distribution \(p(\text{data})\) of a dataset—either explicitly or implicitly—so that we can sample new data points from it.

- Implicit Models: Define a stochastic procedure to generate samples without an explicit density.

- Examples: Generative Adversarial Networks (GANs), Markov Chains.

- Explicit Models: Specify a parametric form for the density \(p(\text{data})\).

- Tractable Explicit Models: The density can be evaluated directly.

- Examples: Autoregressive models (PixelRNN, PixelCNN), Normalizing Flows.

- Approximate Explicit Models: The density is intractable; use approximations.

- Examples: Variational Autoencoders (VAEs), Boltzmann Machines (via MCMC).

- Tractable Explicit Models: The density can be evaluated directly.

- Implicit Models: Define a stochastic procedure to generate samples without an explicit density.

Autoencoders (AEs)

- Autoencoders are neural networks for unsupervised representation learning and dimensionality reduction.

- Encoder \(f_{\phi}\): maps \(x\) to latent \(z = f_{\phi}(x)\).

- Decoder \(g_{\theta}\): reconstructs \(\hat{x} = g_{\theta}(z)\) from \(z\).

- Assumes data lie on a low-dimensional manifold embedded in the input space.

- Desirable latent properties:

- Smoothness: small changes in \(z\) yield small, meaningful changes in \(\hat{x}\).

- Disentanglement: each dimension of \(z\) corresponds to a distinct factor of variation.

- PCA as a Linear Autoencoder: PCA minimizes \(L_2\) reconstruction loss under orthonormality constraints.

- Nonlinear Autoencoders: Use neural nets, optimize \(\|x - \hat{x}\|_2^2\) (MSE).

- Latent Dimension:

- Undercomplete: \(\dim(Z) < \dim(X)\) for compression.

- Overcomplete: \(\dim(Z) \ge \dim(X)\) (e.g. denoising, sparse coding).

- Denoising Autoencoders: Train to reconstruct clean \(x\) from corrupted \(x'\), loss computed on \(x\).

- Limitation: Standard AEs lack a structured latent space; sampling arbitrary \(z\) often yields poor outputs.

Variational Autoencoders (VAEs)

VAEs learn a continuous, structured latent space suitable for generation.

Encoder (Recognition Model) \(q_{\phi}(z|x)\):

- Outputs parameters of a Gaussian: mean \(\mu_{\phi}(x)\) and log-variance \(\log \sigma^2_{\phi}(x)\).

- Defines \(q_{\phi}(z|x) = \mathcal{N}(z; \mu_{\phi}(x), \mathrm{diag}(\sigma^2_{\phi}(x)))\).

Reparameterization Trick:

- Sample \(\epsilon \sim \mathcal{N}(0,I)\).

- Set \(z = \mu_{\phi}(x) + \sigma_{\phi}(x)\odot \epsilon\), permitting backpropagation.

Regularization & Posterior Collapse:

- Without regularization, \(\sigma_{\phi}(x)\to 0\) → AE collapse.

- Posterior collapse: decoder ignores \(z\).

- Remedy: add \(D_{KL}(q_{\phi}(z|x)\|p(z))\) to the loss, where \(p(z)=\mathcal{N}(0,I)\).

Derivation of the Objective Function (Evidence Lower Bound - ELBO):

- Goal: maximize the marginal likelihood \[ p_{\theta}(x) = \int p_{\theta}(x,z)\,dz. \]

- Insert approximate posterior \(q_{\phi}(z|x)\): \[ \log p_{\theta}(x) = \log \int q_{\phi}(z|x)\,\frac{p_{\theta}(x,z)}{q_{\phi}(z|x)}\,dz = \log \mathbb{E}_{q_{\phi}(z|x)}\Bigl[\tfrac{p_{\theta}(x,z)}{q_{\phi}(z|x)}\Bigr]. \]

- Apply Jensen’s inequality (\(\log\) concave): \[ \log p_{\theta}(x) \;\ge\; \mathbb{E}_{q_{\phi}(z|x)}\Bigl[\log\tfrac{p_{\theta}(x,z)}{q_{\phi}(z|x)}\Bigr]. \]

- Decompose the expectation: \[ \mathbb{E}_{q_{\phi}(z|x)}\Bigl[\log\tfrac{p_{\theta}(x,z)}{q_{\phi}(z|x)}\Bigr] = \mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)] + \mathbb{E}_{q_{\phi}(z|x)}[\log p(z)] - \mathbb{E}_{q_{\phi}(z|x)}[\log q_{\phi}(z|x)]. \]

- Recognize \[ \mathbb{E}_{q_{\phi}(z|x)}[\log p(z)] - \mathbb{E}_{q_{\phi}(z|x)}[\log q_{\phi}(z|x)] = -D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr). \]

- Therefore, \[ \log p_{\theta}(x) = \underbrace{\mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)]}_{(1)\,\text{Reconstruction}} \;-\;\underbrace{D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr)}_{(2)\,\text{Prior matching}} \;+\;\underbrace{D_{KL}\bigl(q_{\phi}(z|x)\|p_{\theta}(z|x)\bigr)}_{(3)\,\ge0\,,\text{+gap}}. \]

- The ELBO is terms (1) and (2): \[ \mathrm{ELBO}(\phi,\theta;x) = \mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)] - D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr). \]

- Maximizing the ELBO maximizes a lower bound on \(\log p_{\theta}(x)\).

- The VAE loss (to minimize) is the negative ELBO: \[ L_{VAE}(\phi,\theta;x) = -\mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)] + D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr). \]

Analytical KL Divergence:

- If \(q_{\phi}(z|x)=\mathcal{N}(z;\mu_{\phi}(x),\mathrm{diag}(\sigma^2_{\phi}(x)))\) and \(p(z)=\mathcal{N}(0,I)\), then \[ D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr) = \tfrac{1}{2}\sum_{j=1}^{D}\bigl(\sigma_j^2(x)+\mu_j^2(x) -\log\sigma_j^2(x)-1\bigr). \]

Generation After Training:

- Sample \(z\sim p(z)\) and decode via \(p_{\theta}(x|z)\).

- Without conditioning, attributes (e.g. class) are uncontrolled.

- Conditional VAEs (CVAEs): condition on labels \(y\), use \(q_{\phi}(z|x,y)\) and \(p_{\theta}(x|z,y)\) for controlled generation.

β-VAEs

β-VAEs introduce a hyperparameter β (>1) to the ELBO: \[ L_{\beta\text{-VAE}}(\phi,\theta;x) = -\mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)] + \beta\,D_{KL}\bigl(q_{\phi}(z|x)\|p(z)\bigr). \]

Rationale for Disentanglement:

- The KL term matches \(q_{\phi}(z|x)\) to an isotropic Gaussian \(p(z)=\mathcal{N}(0,I)\).

- Isotropy implies zero mean, unit variance, and independence across latent dimensions.

- By upweighting the KL term (β>1), β-VAEs more strongly penalize any deviation from:

- unit variance in each \(z_j\),

- zero covariance between distinct latent axes.

- This stronger pressure reduces correlations among latent dimensions, encouraging each \(z_j\) to capture an independent factor of variation.

Constrained Optimization Perspective:

- Maximizing ELBO subject to \(D_{KL}(q_{\phi}(z|x)\|p(z))\le \epsilon_0\).

- β acts as a Lagrange multiplier: larger β ↔︎ smaller ε₀ ↔︎ tighter constraint on information capacity.

- A tighter constraint forces a more compressed, factorized (disentangled) representation.

Disentanglement vs. Reconstruction Trade-off:

- Increasing β improves disentanglement but may degrade reconstruction quality.

- The model prioritizes matching \(p(z)\) over reconstructing \(x\) perfectly.

Autoregressive Models

Autoregressive (AR) models factorize a high-dimensional joint distribution into an ordered product of conditionals, enabling exact likelihood computation and principled density estimation.

General Principles

- Factorization

\[p(\mathbf{x}) = \prod_{i=1}^{D} p\bigl(x_i \mid x_{<i}\bigr),\quad x_{<i}=(x_1,\dots,x_{i-1}).\] - Naive Parameterization

- A full tabular representation of each conditional \(p(x_i \mid x_{<i})\) requires \(O(K^D)\) parameters for discrete variables with alphabet size \(K\).

- Alternatively, one could learn \(D\) independent classifiers—one per position—each with its own parameters, giving \(O(D\cdot P)\) parameters for classifier size \(P\).

- Tractable Likelihood

- Exact computation of \(p(\mathbf{x})\) permits maximum likelihood training and direct model comparison.

- Data Modalities

- Sequences (e.g. text): natural ordering

\[p(\text{token}_t \mid \text{tokens}_{<t}).\] - Images: impose scan order (raster, zigzag, diagonal); choice of ordering affects receptive field and modeling capacity.

- Sequences (e.g. text): natural ordering

- Pros & Cons

- Exact density estimation → anomaly detection, likelihood-based evaluation

- Sequential generation in \(O(D)\) steps; slow for high-dimensional \(D\)

- Ordering choice for images is non-trivial

- Comparison to Independence

- Independent model: \(p(\mathbf{x})=\prod_i p(x_i)\) ignores structure but is fast.

Early AR Models for Binary Data

Fully Visible Sigmoid Belief Net (FVSBN)

- Models \(\mathbf{x}\in\{0,1\}^D\) with fixed ordering.

- Conditional logistic regression: \[ p(x_i=1 \mid x_{<i}) = \sigma\!\Bigl(\alpha_i + \sum_{j<i}W_{ij}\,x_j\Bigr). \]

- Parameters: biases \(\{\alpha_i\}_{i=1}^D\) and weights \(W\in\mathbb{R}^{D\times D}\) (upper-triangular).

- Complexity: \(O(D^2)\) parameters, simple but parameter-inefficient.

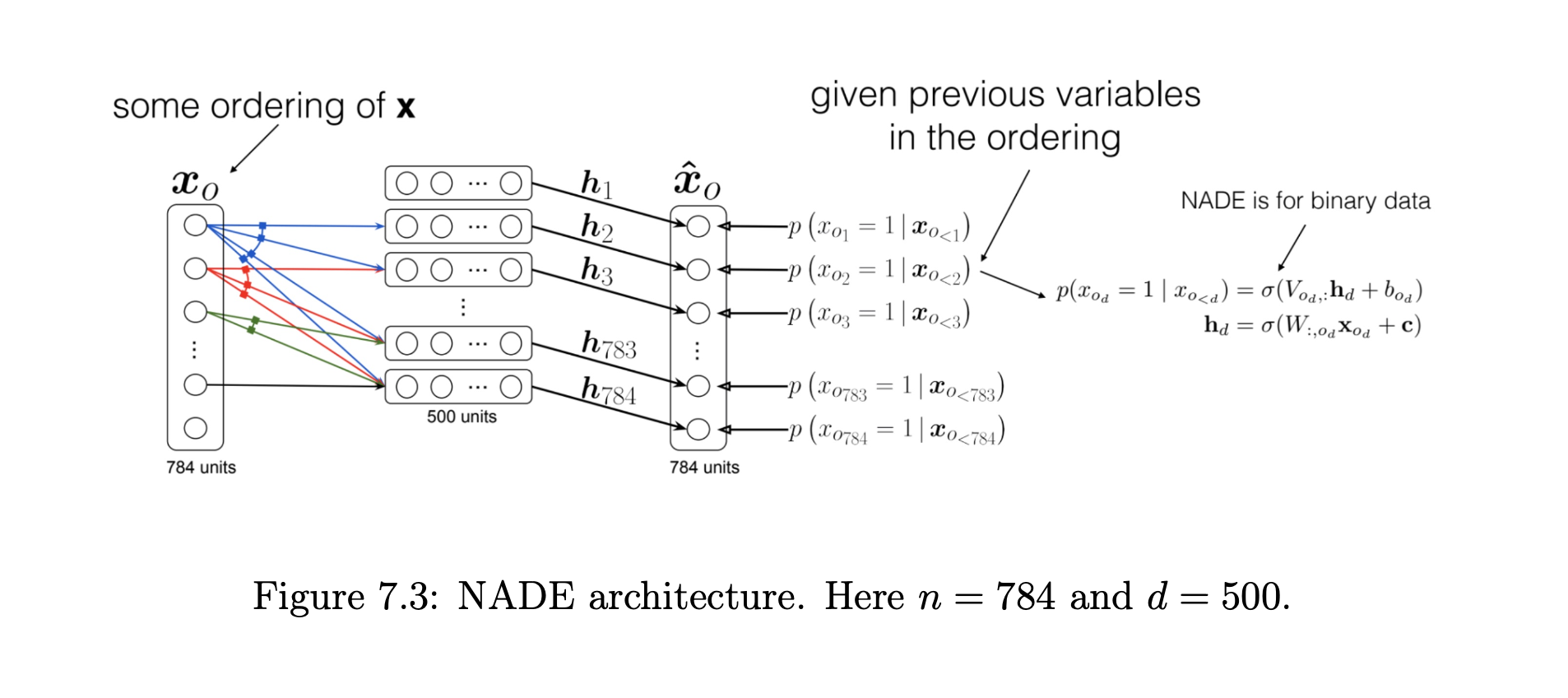

Neural Autoregressive Density Estimator (NADE)

- Shares hidden-layer parameters across all conditionals to reduce complexity from \(O(D^2)\) to \(O(Dd)\), where \(d\) is hidden size.

- Model

- Hidden activations \[ a_1 = c,\quad a_{i+1} = a_i + W_{:,\,i}\,x_i,\quad h_i = \sigma(a_i), \] where \(W\in\mathbb{R}^{d\times D}\) and \(c\in\mathbb{R}^d\) are shared.

- Conditional probability \[ p(x_i=1 \mid x_{<i}) = \hat{x}_i = \sigma\bigl(b_i + V_{i,:}\,h_i\bigr), \] with \(V\in\mathbb{R}^{D\times d}\) and \(b\in\mathbb{R}^D\).

- Properties

- Parameter count: \(O(Dd)\) vs. \(O(D^2)\) in FVSBN

- Computation: joint log-likelihood \(\sum_i\log p(x_i\mid x_{<i})\) in \(O(Dd)\) via one forward pass

- Training

- Maximize average log-likelihood using teacher forcing (use ground-truth \(x_{<i}\)).

- Extensions: RNADE (real-valued inputs), DeepNADE (deep MLP), ConvNADE.

- Inference

- Sequential sampling \(x_1,\dots,x_D\), with optional random ordering for binary pixels.

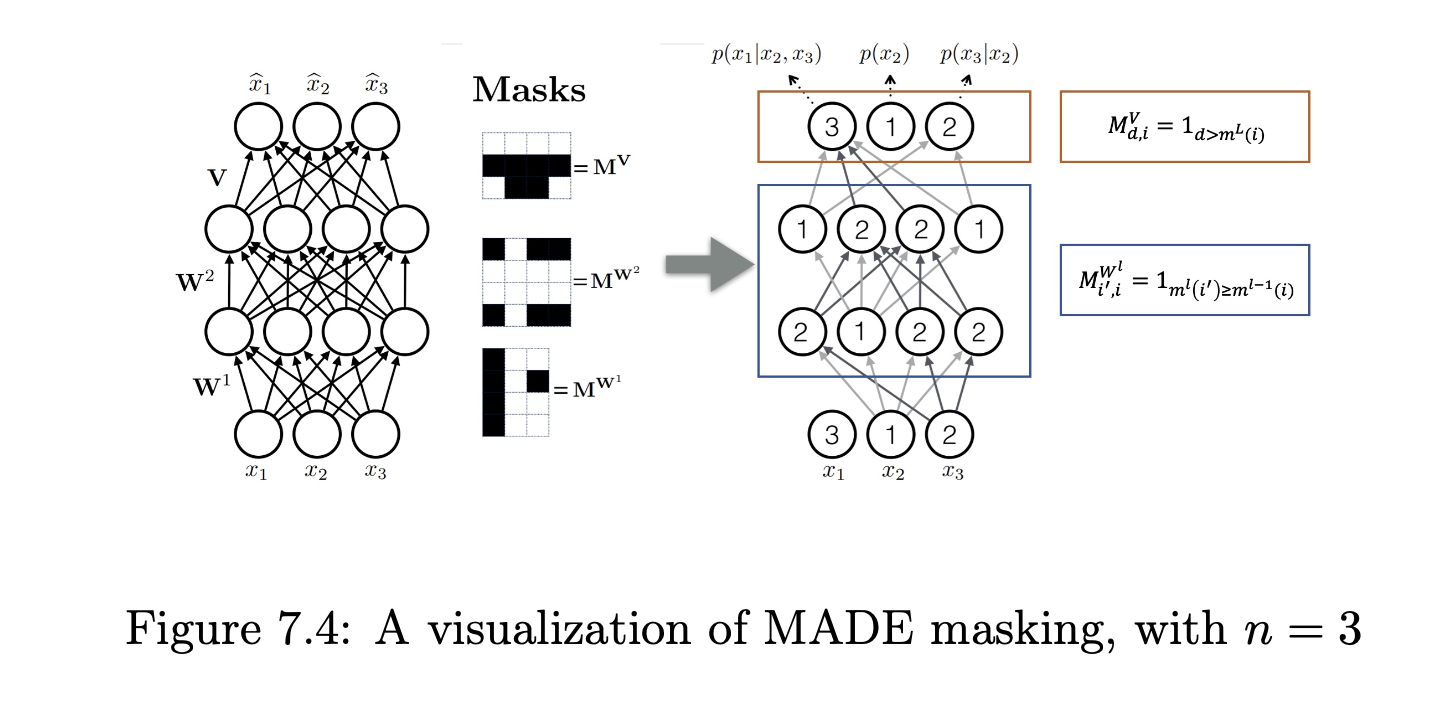

MADE (Masked Autoencoder for Distribution Estimation)

- Adapts a feed-forward autoencoder to AR modeling by masking weights to enforce \(x_i\) depends only on \(x_{<i}\).

- Masking

- Assign each unit an index \(m\in\{1,\dots,D\}\).

- A weight from unit \(u\to v\) is active only if \(m(u)<m(v)\) (hidden layers) and \(m(u)<i\) for output \(x_i\).

- Training & Generation

- Train by minimizing negative log-likelihood.

- Sequential sampling: \(D\) forward passes, one per new \(x_i\).

- Ordering Robustness

- Randomizing masks/orders per epoch improves generalization.

AR Models for Images

PixelRNN

- Uses RNNs (LSTM/GRU) over flattened pixels.

- Dependency: Each pixel \(x_i\) conditioned on RNN hidden state summarizing \(x_{<i}\).

- Ordering: Raster or diagonal scan; variants include Row LSTM, Diagonal BiLSTM.

- Generation: \(O(HW)\) sequential steps for \(H\times W\) image.

PixelCNN

- Uses masked convolutions to model \(p(x_{r,c}\mid x_{\prec(r,c)})\).

- Mask Types

- Type A (first layer): blocks current pixel.

- Type B (subsequent): allows self-connection but no future pixels.

- Training

- Parallel over all pixels via masked convolution stacks.

- Generation

- Sequential sampling \(O(HW)\) steps.

- Blind-Spot Issue

- Deep stacks can leave unconditioned “holes.” Gated PixelCNN or two-pass designs address this.

TCNs (Temporal Convolutional Networks) & WaveNet

- TCN: 1D causal convolutions ensure \(y_t\) depends only on \(x_{\le t}\).

- Dilated Convolutions

- Factor \(d\): gaps between filter taps.

- Stack dilations \(1,2,4,\dots\) for exponential receptive field growth.

- WaveNet

- Masked, dilated convolutions on raw audio.

- Models long-range audio dependencies efficiently.

Variational RNNs (VRNNs) & C-VRNNs

- VRNN

- Embeds a VAE at each timestep \(t\).

- Prior: \(p(z_t\mid h_t)=\mathcal{N}(\mu_{0,t},\mathrm{diag}(\sigma_{0,t}^2))\) where \((\mu_{0,t},\sigma_{0,t})=\phi_{\text{prior}}(h_t)\).

- C-VRNN

- Conditions on external variables (e.g. control signals) alongside latent \(z_t\).

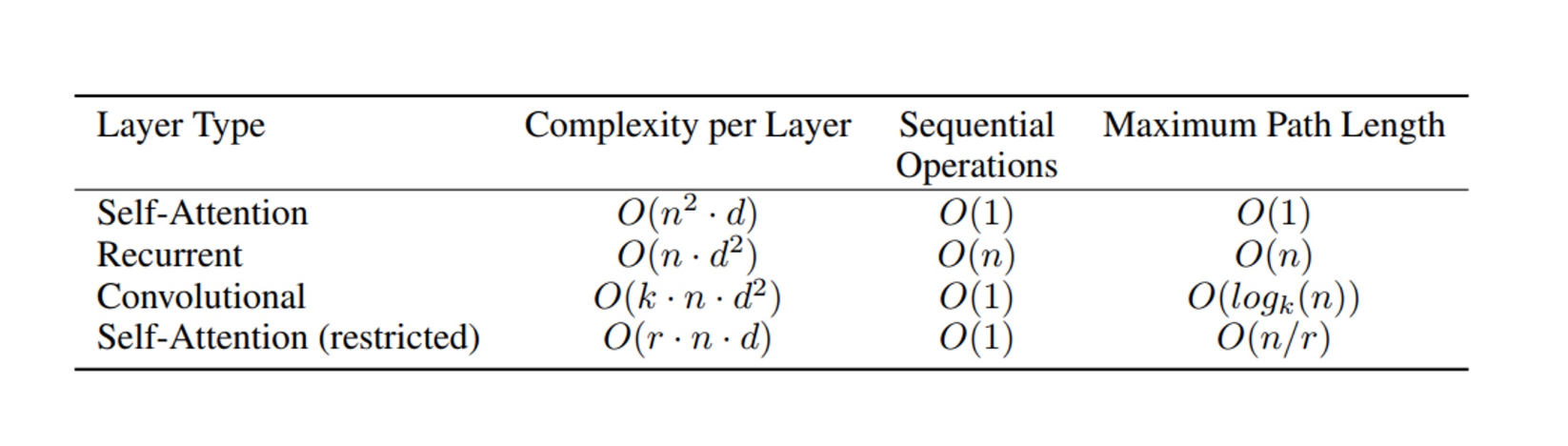

Architecture Trade-offs for \(p(x_i\mid x_{<i})\)

- RNNs

- Compress arbitrary history.

- Recency bias; vanishing distant signals.

- CNNs (Masked/Dilated)

- Parallelizable; scalable receptive field.

- Fixed context; blind spots.

- Transformers

- Masked self-attention to all \(x_{<i}\).

- Highly parallel training.

- Quadratic \(O(L^2)\) attention cost; need positional encoding.

- Large Language Models (LLMs)

- Transformer-based AR on token sequences.

- Pretraining: next-token prediction.

- Fine-Tuning: supervised tasks (SFT).

- Tokenization: subword units (BPE, WordPiece).

AR for High-Resolution Images & Video

- Challenge: Millions of pixels/voxels → \(O(D)\) sequential steps infeasible.

- Approach: Compress data into discrete latent sequences via VQ-VAE.

Vector-Quantized VAE (VQ-VAE)

Objective: Learn a discrete latent representation for high-dimensional data, enabling compact encoding and efficient autoregressive modeling.

Model Components:

- Encoder:

- A convolutional neural network (CNN) mapping input

\(\mathbf{x}\in\mathbb{R}^{H\times W\times C}\) to continuous latents

\(\mathbf{z}_e(\mathbf{x})\in\mathbb{R}^{H'\times W'\times D}\). - Spatial down-sampling factor \(s\): \(H'=H/s,\;W'=W/s\) (e.g. \(s=8\)).

- A convolutional neural network (CNN) mapping input

- Codebook:

- A collection \(\mathcal{E}=\{e_j\in\mathbb{R}^D\mid j=1,\dots,K\}\) of \(K\) trainable embedding vectors.

- Quantization:

- For each spatial cell \((p,q)\), take

\(z_e(\mathbf{x})_{p,q}\in\mathbb{R}^D\) and find nearest codebook index \[ k_{p,q} = \arg\min_{j\in\{1..K\}} \bigl\|z_e(\mathbf{x})_{p,q} - e_j\bigr\|_2^2. \] - Replace \(z_e(\mathbf{x})_{p,q}\) with \(e_{k_{p,q}}\), yielding discrete latents

\(\mathbf{z}_q(\mathbf{x})\in\mathbb{R}^{H'\times W'\times D}\).

- For each spatial cell \((p,q)\), take

- Decoder:

- A CNN mapping \(\mathbf{z}_q(\mathbf{x})\) back to a reconstruction

\(\hat{\mathbf{x}}\in\mathbb{R}^{H\times W\times C}\).

- A CNN mapping \(\mathbf{z}_q(\mathbf{x})\) back to a reconstruction

- Encoder:

Loss Terms:

- Reconstruction Loss: \[ L_{\mathrm{rec}} = \bigl\|\mathbf{x} - \hat{\mathbf{x}}\bigr\|_2^2. \]

- Codebook Loss: (fix encoder, update codebook) \[ L_{\mathrm{cb}} = \sum_{p,q} \bigl\|\mathrm{sg}\bigl[z_e(\mathbf{x})_{p,q}\bigr] - e_{k_{p,q}}\bigr\|_2^2. \]

- Commitment Loss: (fix codebook, update encoder) \[ L_{\mathrm{com}} = \beta \sum_{p,q} \bigl\|z_e(\mathbf{x})_{p,q} - \mathrm{sg}\bigl[e_{k_{p,q}}\bigr]\bigr\|_2^2, \] where \(\beta\) (e.g. \(0.25\)) balances encoder commitment, and \(\mathrm{sg}[\cdot]\) is stop‐gradient.

- Total Loss: \[ L_{\mathrm{VQ\text{-}VAE}} = L_{\mathrm{rec}} + L_{\mathrm{cb}} + L_{\mathrm{com}}. \]

Optimization Details:

- Straight-Through Estimator (STE):

- Quantization is non-differentiable.

- In backprop, pass gradients from \(\mathbf{z}_q\) directly to \(\mathbf{z}_e\) (i.e. treat quantization as identity).

- Codebook Updates via EMA (alternatively):

- Maintain per‐embedding counts \(N_j\) and sums \(m_j\): \[ N_j \gets \gamma\,N_j + (1-\gamma)\sum_{p,q}\mathbf{1}[k_{p,q}=j], \] \[ m_j \gets \gamma\,m_j + (1-\gamma)\sum_{p,q:k_{p,q}=j} z_e(\mathbf{x})_{p,q}, \] then update \[ e_j \gets \frac{m_j}{N_j}, \] with decay \(\gamma\approx0.99\).

- Straight-Through Estimator (STE):

Practical Considerations:

- Hyperparameters:

- Codebook size \(K\) (e.g. 512–1024), embedding dimension \(D\) (e.g. 64–256).

- Compression factor \(s\) reduces sequence length from \(HW\) to \(H'W'\).

- Downstream Use:

- After training, the discrete codes \(\{k_{p,q}\}\) form a sequence of length \(H'W'\).

- An autoregressive model (e.g. Transformer) can be trained on these code sequences:

\[p(k_{p,q}\mid k_{<p,q}).\]

- Intuition:

- Each \(e_j\) becomes a “prototype” latent vector.

- The encoder emits a continuous vector, which is snapped to its nearest prototype, enforcing discreteness and preventing posterior collapse.

- Hyperparameters:

Generative Adversarial Networks (GANs)

Introduction

- Origin and Context

- GANs were introduced by Goodfellow et al. in 2014.

- They became widely adopted and studied around 2016.

- Implicit Generative Models

- GANs are implicit generative models: they learn to represent a data distribution \(p_{data}(x)\) through a generative process, rather than by explicitly modeling the probability density function.

- The model does not require an explicit likelihood function.

Limitations of Likelihood-based Methods

- Challenges in Likelihood Estimation

- Maximizing likelihood does not guarantee high-quality or representative samples.

- A model may assign high likelihood to noisy or outlier regions, skewing the overall likelihood score, even if it fails to capture the main modes of the data.

- Maximizing likelihood does not guarantee high-quality or representative samples.

- Paradoxical Outcomes

- Models can achieve high likelihood scores while generating poor-quality samples.

- Conversely, models that generate visually realistic samples may have poor log-likelihood scores.

- Memorization vs. Generalization

- Likelihood-based models may overfit and memorize the training data, achieving high likelihood on the training set but failing to generalize and generate novel samples.

GANs as Implicit Generative Models

- Key Characteristics

- Likelihood-free: GANs do not require explicit definition or optimization of a likelihood function \(p(x)\).

- Architectural Flexibility: The generator and discriminator can be implemented using various neural network architectures (e.g., MLP, CNN, RNN).

- Transformation: GANs map low-dimensional random noise vectors \(z\) to high-dimensional structured data samples \(G(z)\).

- Sample Quality: GANs often produce sharper and more realistic samples than Variational Autoencoders (VAEs), especially for images.

- Composability: GANs can be integrated with other generative models (e.g., VAEs, Normalizing Flows, Diffusion Models) to potentially improve sample quality or training stability.

Architecture Components

- Generator Network (\(G\))

- Input: Latent vector \(z\) sampled from a prior distribution \(p_z(z)\) (e.g., standard Gaussian \(\mathcal{N}(0, I)\) or Uniform \(U[-1, 1]\)).

- Output: Synthetic data sample \(x_{fake} = G(z)\), in the same space as the real data.

- Objective: Generate samples \(G(z)\) that are indistinguishable from real data samples \(x \sim p_{data}(x)\).

- Discriminator Network (\(D\))

- Input: Data sample \(x\), which can be either a real sample (\(x \sim p_{data}(x)\)) or a fake sample (\(x = G(z)\)).

- Output: Scalar probability \(D(x) \in [0, 1]\), representing the estimated probability that \(x\) is a real sample from \(p_{data}(x)\).

- Objective: Accurately classify inputs as real (\(D(x) \to 1\)) or fake (\(D(G(z)) \to 0\)).

Training Dynamics

- Adversarial Framework

- The process is a zero-sum, two-player minimax game between \(G\) and \(D\).

- \(G\) tries to fool \(D\) by generating increasingly realistic samples.

- \(D\) tries to improve its ability to distinguish real samples from fake ones generated by \(G\).

- Training involves backpropagating gradients: \(D\)’s gradients guide its own learning, and also flow back through \(D\) (when frozen) to train \(G\).

- The process is a zero-sum, two-player minimax game between \(G\) and \(D\).

- Mathematical Formulation

- The core value function \(V(G, D)\) defines the minimax objective: \[ \min_G \max_D V(G, D) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))] \]

- Optimization

- \(D\) is trained to maximize \(V(G, D)\) (maximize classification accuracy).

- \(G\) is trained to minimize \(V(G, D)\) (minimize the log-probability of \(D\) being correct about fake samples).

- Theoretical Equilibrium (Nash Equilibrium)

- If \(G\) and \(D\) have sufficient capacity and training converges optimally:

- The generator’s distribution \(p_g\) matches the real data distribution \(p_{data}\).

- The discriminator outputs \(D(x) = 1/2\) everywhere, unable to distinguish real from fake.

- Assumptions: This requires strong assumptions like infinite model capacity and the ability to find the Nash equilibrium, which are often not met in practice.

- If \(G\) and \(D\) have sufficient capacity and training converges optimally:

Practical Training Algorithm

- Alternating Optimization

- Simultaneous gradient descent for minimax games is unstable, so training typically alternates:

- Train Discriminator: Freeze \(G\), update \(D\) for one or more (\(k\)) steps by ascending its gradient: \(\nabla_D V(G, D)\).

- Train Generator: Freeze \(D\), update \(G\) for one step by descending its gradient: \(\nabla_G V(G, D)\).

- Repeat.

- Often \(k > 1\) (e.g., \(k=5\)) to ensure \(D\) remains relatively well-optimized.

- Simultaneous gradient descent for minimax games is unstable, so training typically alternates:

- Training Considerations

- Balance: Crucial to maintain balance between \(G\) and \(D\). If \(D\) becomes too strong too quickly, gradients for \(G\) can vanish. If \(D\) is too weak, \(G\) receives poor guidance.

- Computational Cost: Training is computationally intensive due to the need to train two networks and potentially perform multiple discriminator updates per generator update.

Proposition 1: Optimal Discriminator

For a fixed generator \(G\), the optimal discriminator \(D^*(x)\) that maximizes \(V(G, D)\) is:

\[ D^*(x) = \frac{p_{data}(x)}{p_{data}(x) + p_g(x)} \]

where \(p_g(x)\) is the probability density of the samples generated by \(G\).

Derivation:

- The discriminator’s objective is: \[ \max_D \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))] \]

- By the law of the unconscious statistician, this can be rewritten as: \[ \max_D \int_x p_{data}(x) \log D(x) dx + \int_x p_g(x) \log(1 - D(x)) dx \]

- For each \(x\), maximize \(a \log y + b \log(1-y)\) with \(a = p_{data}(x)\), \(b = p_g(x)\), \(y = D(x)\).

- The maximum is at \(y^* = \frac{a}{a+b}\), so: \[ D^*(x) = \frac{p_{data}(x)}{p_{data}(x) + p_g(x)} \]

Proposition 2: GANs Minimize Jensen-Shannon Divergence

- When \(D\) is optimal (\(D^*\)), the training objective for \(G\) corresponds to minimizing the Jensen-Shannon (JS) divergence between \(p_{data}\) and \(p_g\).

- Kullback-Leibler (KL) Divergence: \[ D_{KL}(P || Q) = \int P(x) \log \frac{P(x)}{Q(x)} dx \]

- Jensen-Shannon (JS) Divergence: \[ D_{JS}(P || Q) = \frac{1}{2} D_{KL}(P || M) + \frac{1}{2} D_{KL}(Q || M) \] where \(M = \frac{1}{2}(P + Q)\). \(0 \le D_{JS}(P || Q) \le \log 2\).

- Proof Sketch:

- Substitute \(D^*(x)\) into \(V(G, D)\): \[ \begin{aligned} C(G) &= \max_D V(G, D) = V(G, D^*) \\ &= \mathbb{E}_{x \sim p_{data}}[\log D^*(x)] + \mathbb{E}_{x \sim p_g}[\log(1 - D^*(x))] \\ &= \mathbb{E}_{x \sim p_{data}}\left[\log \frac{p_{data}(x)}{p_{data}(x) + p_g(x)}\right] + \mathbb{E}_{x \sim p_g}\left[\log \frac{p_g(x)}{p_{data}(x) + p_g(x)}\right] \\ &= \int p_{data}(x) \log \frac{p_{data}(x)}{2 \cdot \frac{1}{2}(p_{data}(x) + p_g(x))} dx + \int p_g(x) \log \frac{p_g(x)}{2 \cdot \frac{1}{2}(p_{data}(x) + p_g(x))} dx \\ &= \int p_{data}(x) \left( \log \frac{p_{data}(x)}{m(x)} - \log 2 \right) dx + \int p_g(x) \left( \log \frac{p_g(x)}{m(x)} - \log 2 \right) dx \\ &= (D_{KL}(p_{data} || m) - \log 2) + (D_{KL}(p_g || m) - \log 2) \\ &= D_{KL}(p_{data} || m) + D_{KL}(p_g || m) - 2 \log 2 \\ &= 2 D_{JS}(p_{data}, p_g) - 2 \log 2 \end{aligned} \]

- Minimizing \(C(G)\) with respect to \(G\) is equivalent to minimizing \(D_{JS}(p_{data}, p_g)\), as \(-2 \log 2\) is a constant.

- The minimum value of \(D_{JS}\) is \(0\), achieved if and only if \(p_{data} = p_g\).

Practical Training Algorithm (continued)

- The theoretical analysis assumes \(D\) can always reach its optimum \(D^*\) for any \(G\), but in practice, \(D\) is only optimized for a finite number of steps (\(k\)), and this inner-loop optimization is computationally intensive and can lead to overfitting on finite datasets.

- Alternating optimization (typically \(k \in \{1, ..., 5\}\) steps for \(D\) per \(G\) update) is used to keep \(D\) near optimal while allowing \(G\) to change slowly.

Issues with Training

- Vanishing Gradients

- Problem: When \(D\) is very confident (outputs close to 0 or 1) and accurate, the gradient \(\log(1 - D(G(z)))\) for \(G\) can become very small (saturate), especially early in training when \(G\) produces poor samples easily distinguishable by \(D\). This stalls the learning of \(G\).

- Solution (Non-Saturating Loss): Instead of minimizing \(\mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]\), the generator maximizes \(\mathbb{E}_{z \sim p_z(z)}[\log D(G(z))]\). This provides stronger gradients early in training. The generator’s objective becomes: \[ \mathcal{L}_G = - \mathbb{E}_{z \sim p_z(z)}[\log D(G(z))] \]

- Mode Collapse

- Problem: The generator \(G\) learns to produce only a limited variety of samples, collapsing many modes of the true data distribution \(p_{data}\) into one or a few output modes. \(G\) finds a few samples that can easily fool the current \(D\), and stops exploring.

- Potential Causes: \(G\) finds a local minimum in the adversarial game.

- Mitigation Strategies:

- Architectural changes

- Alternative loss functions (e.g., Wasserstein GAN, which uses the Wasserstein distance as a similarity measure between distributions, providing smoother gradients and better mode coverage)

- Unrolled GANs: The generator is optimized with respect to the state of \(D\) after the next \(k\) steps, discouraging \(G\) from exploiting local minima.

- Mini-batch discrimination and gradient accumulation can also help.

- Training Instability

- Problem: The adversarial training process involves finding a Nash equilibrium, which is inherently more unstable than standard minimization problems. Updates improving one player (\(G\) or \(D\)) might worsen the objective for the other, leading to oscillations or divergence.

- Mitigation Strategies:

- Careful hyperparameter tuning

- Architectural choices (e.g., Spectral Normalization)

- Gradient penalties (e.g., WGAN-GP adds a penalty term related to the norm of the discriminator’s gradient with respect to its input, often evaluated at points interpolated between real and fake samples: \(\|\nabla_{\hat{x}} D(\hat{x})\|_2\)).

- Explanation: In WGAN-GP, the gradient penalty enforces the Lipschitz constraint by penalizing the squared deviation of the gradient norm from 1: \[ \lambda \mathbb{E}_{\hat{x}} \left( \|\nabla_{\hat{x}} D(\hat{x})\|_2 - 1 \right)^2 \] where \(\hat{x}\) is sampled uniformly along straight lines between pairs of points from the real and generated data distributions.

StyleGANs

- Overview

- StyleGANs are a family of GAN architectures known for generating high-resolution, high-fidelity images (e.g., 1024x1024 faces).

- Key Innovations

- Progressive Growing (introduced in PGGAN, adopted by StyleGAN)

- Training starts with low-resolution images (e.g., 4x4).

- New layers are progressively added to both \(G\) and \(D\) to increase the resolution (e.g., 8x8, 16x16, …), fine-tuning the network at each stage. This stabilizes training for high resolutions.

- Style-Based Generator

- Mapping Network: A separate network (e.g., an MLP) maps the initial latent code \(z \sim p_z(z)\) to an intermediate latent space \(W\). This disentangles the latent space. \(w \in W\).

- Adaptive Instance Normalization (AdaIN): The intermediate latent \(w\) is transformed into style parameters (scale \(\mathbf{y}_s\) and bias \(\mathbf{y}_b\)) which modulate the activations at each layer of the synthesis network. \[ \text{AdaIN}(x_i, w) = \mathbf{y}_{s,i} \frac{x_i - \mu(x_i)}{\sigma(x_i)} + \mathbf{y}_{b,i} \] where \(x_i\) are the activations of the \(i\)-th feature map, \(\mu(x_i)\) and \(\sigma(x_i)\) are the mean and standard deviation, and \(\mathbf{y}_{s,i}, \mathbf{y}_{b,i}\) are learned affine transformations applied to \(w\).

- Constant Input & Noise Injection: The synthesis network starts from a learned constant tensor instead of the latent code directly. Stochasticity (e.g., hair placement, freckles) is introduced by adding learned per-pixel noise at each layer, scaled by learned factors.

- Hierarchical Control: Injecting \(w\) (via AdaIN) at different layers controls different levels of attributes: early layers affect coarse features (pose, shape), while later layers affect finer details (color scheme, micro-textures).

- Progressive Growing (introduced in PGGAN, adopted by StyleGAN)

Image-to-Image Translation

- Task: Learn a mapping function to translate an image from a source domain A to a target domain B (e.g., segmentation maps to photos, sketches to photos, day to night).

- Pix2Pix

- A conditional GAN (cGAN) approach where the generator is conditioned on the input image from domain A. \(G: A \to B\).

- Requires Paired Data: Training needs datasets where each image \(x_A\) has a corresponding ground truth image \(x_B\).

- Loss Function: Combines a conditional GAN loss (discriminator \(D\) tries to distinguish real pairs \((x_A, x_B)\) from fake pairs \((x_A, G(x_A))\)) with a reconstruction loss, typically L1 loss: \[ \mathcal{L}_{L1}(G) = \mathbb{E}_{x_A, x_B}[||x_B - G(x_A)||_1] \] L1 loss is often preferred over L2 (MSE) as it encourages less blurring in the generated images.

- Architecture: Often uses a “U-Net” architecture for the generator and a “PatchGAN” discriminator (classifies patches of the image as real/fake).

- CycleGAN

- Designed for Unpaired Data: Can learn translations when there is no direct one-to-one mapping between images in domain A and domain B (e.g., translating photos of horses to zebras, or photographs to Monet paintings).

- Architecture: Uses two generators (\(G_{A \to B}\) and \(G_{B \to A}\)) and two discriminators (\(D_A\) and \(D_B\)).

- \(G_{A \to B}\): Translates A to B. \(D_B\): Distinguishes real B images from generated \(\hat{x}_B = G_{A \to B}(x_A)\).

- \(G_{B \to A}\): Translates B to A. \(D_A\): Distinguishes real A images from generated \(\hat{x}_A = G_{B \to A}(x_B)\).

- Cycle Consistency Loss: Ensures that translating an image from A to B and back to A should recover the original image (and vice-versa). \[ \mathcal{L}_{cyc}(G_{A \to B}, G_{B \to A}) = \mathbb{E}_{x_A}[||G_{B \to A}(G_{A \to B}(x_A)) - x_A||_1] + \mathbb{E}_{x_B}[||G_{A \to B}(G_{B \to A}(x_B)) - x_B||_1] \]

- Total Loss: Sum of the adversarial losses for both mappings and the cycle consistency loss. \[ \mathcal{L}_{total} = \mathcal{L}_{GAN}(G_{A \to B}, D_B) + \mathcal{L}_{GAN}(G_{B \to A}, D_A) + \lambda \mathcal{L}_{cyc}(G_{A \to B}, G_{B \to A}) \] where \(\lambda\) is a hyperparameter controlling the cycle consistency term.

Other Applications & Extensions

- Video-to-Video Translation (Vid2Vid)

- Extends image translation to video, requiring temporal consistency across generated frames.

- 3D GANs

- Generate 3D shapes or scenes, often represented as voxel grids, point clouds, or implicit neural representations.

Normalizing Flows (NFs)

Core Concepts

Definition

- Normalizing Flows (NFs) are generative models that represent a complex data distribution \(p(x)\) by transforming a simple base distribution \(p_Z(z)\) (e.g., Gaussian) through an invertible and differentiable function \(f: \mathcal{Z} \to \mathcal{X}\), where \(x = f(z)\).

Tractable Likelihood

- NFs allow exact likelihood computation using the change of variables formula. Given \(z = f^{-1}(x)\): \[ p_X(x) = p_Z(z) \left| \det \left( \frac{\partial z}{\partial x} \right) \right| = p_Z(f^{-1}(x)) \left| \det J_{f^{-1}}(x) \right| \]

- Alternatively, by the inverse function theorem (\(J_f(z) = (J_{f^{-1}}(x))^{-1}\)): \[ p_X(x) = p_Z(z) \left| \det J_f(z) \right|^{-1} \]