Part 3 - Week 3

Privacy, Memorization, Differential Privacy

- Data as a Resource and Privacy Risks

Data is increasingly valuable, requiring caution when inputting information into systems like chatbots (e.g., Gboard or ChatGPT), as it may be used for further training. This includes personal data or company secrets. Even without explicit leaks or misuse, aggregating data in one searchable repository poses risks, such as enabling reverse image search for facial recognition. Modern image models also excel at geoguessing (inferring locations from images), amplifying privacy concerns.

Desired Privacy Definitions for Language Models

Power Dynamics in Training

Model trainers have access to all data, creating insecurity even if not misused. The goal is to avoid trusting the recipient by sending data in a protected form, akin to encryption.Secure Computation Techniques

- Secure Multiparty Computation (SMC): Allows multiple parties to compute jointly on private inputs without revealing them.

- Homomorphic Encryption (HE): Enables computations directly on encrypted data, yielding encrypted results.

- These methods are computationally slow and expensive, limiting scalability for large models.

Pragmatic Solutions

- Confidential computing platforms use hardware with encrypted memory and attestation mechanisms to prove security. These are reasonably efficient but require trust in hardware manufacturers (e.g., Intel SGX or AMD SEV).

Learning on Encoded Data

Non-Cryptographic Approaches

- Techniques like data scrambling (not true encryption) are often vague and ineffective in practice.

- Federated Learning: Distributes training across decentralized devices, keeping data local; primarily for computational efficiency rather than privacy, though it offers some protection.

- Vulnerabilities: Gradients can leak data, as they are functions of inputs; demonstrated effectively on images.

- Malicious models can be crafted to extract exact inputs from gradients.

- InstaHide and TextHide: Attempt to privatize images/text via linear mixing, scaling, and sign flipping; preserves learning utility but is reversible, thus ineffective.

Finetuning Scenarios

- When finetuning public models (e.g., on Hugging Face), gradients encode training data, persisting through updates. Techniques exploit this in transformers, with implications for privacy guarantees like Differential Privacy (DP).

Cryptographically Secure but Expensive Methods

- Represent the Neural Network (NN) as a computation graph with operations like addition (\(+\)), multiplication (\(\times\)), and activations (e.g., ReLU).

- Design HE schemes supporting these operations, allowing training on encrypted data.

- Extremely costly, especially for nonlinearities, making it impractical for large-scale models.

Data Memorization and Extraction

Challenges Beyond Training Privacy

Even with private training, releasing the decrypted model may reveal training data, particularly in generative models.Memorization in Pretrained Models

- Base models can predict sensitive information (e.g., completing names from addresses) or regurgitate copyrighted content.

- Modern chatbots are trained to avoid leaks, but safeguards are not foolproof.

Extraction Techniques

- Black-Box Access: Generate large amounts of data and apply Membership Inference Attacks (MIAs) to identify abnormally confident outputs, indicating memorization.

- Random Prompt Search: Probe for substrings matching internet content; base models leak ~1% verbatim web copies, increasing with model size.

- Chatbot-Specific Attacks: Simpler prompts fail, but advanced methods (e.g., instructing repetition of a word) can revert models to base states, outputting random training data.

- Finetuning Attacks: Simulate base-model conversations in user-assistant schemas (e.g., as offered by OpenAI) to extract more memorized content.

Training LLMs with Privacy

- Heuristic Defenses

- Filter outputs matching training data subsets.

- Bloom filters in tools like GitHub Copilot detect and halt generation of famous functions from headers, but can be bypassed to produce copyrighted code and reveal training set details.

- Deduplicate training data to reduce memorization of repeated text, improving robustness.

Differential Privacy

Principled Approach to Prevent Leakage

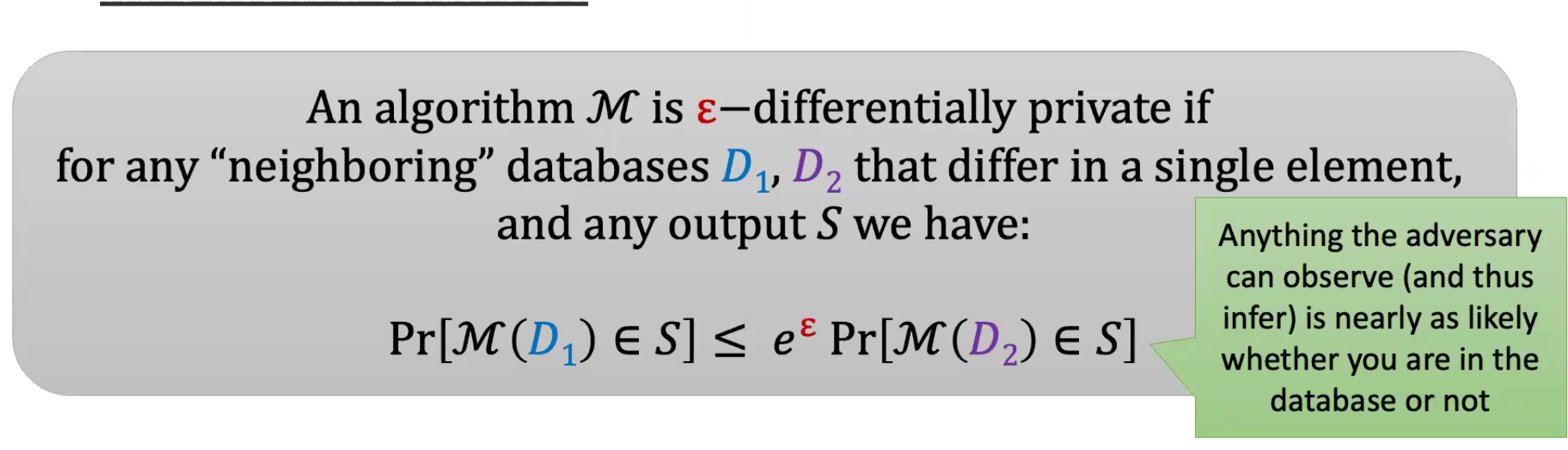

DP provides a formal framework for privacy, balancing learning from data with individual protections. Unlike cryptographic zero-knowledge proofs, DP allows statistical insights while bounding information about individuals.Core Definition

A mechanism is \(\epsilon\)-DP if, for neighboring datasets \(D_1\) and \(D_2\) (differing by one record), the output distributions are close: any information about an individual is nearly as likely whether or not they are in the dataset. This prevents inferring specifics about individuals, focusing on group statistics (e.g., in medical studies).Illustration

Simple Example: Private Sum Computation

For surveys, add noise to approximate the true sum without reconstructing individual responses.- Sensitivity: \(\delta f = \max_{D_1, D_2} |f(D_1) - f(D_2)|\), where \(D_1, D_2\) are neighboring datasets.

- Release \(y \sim \text{Laplace}(f(D), \delta f / \epsilon)\), where Laplace noise has quicker tail decay than Gaussian.

Properties

- Post-Processing: If \(M\) is \(\epsilon\)-DP, any function of \(M\)’s output is also \(\epsilon\)-DP.

- Composition: If \(M_1\) is \(\epsilon_1\)-DP and \(M_2\) is \(\epsilon_2\)-DP, their composition is \((\epsilon_1 + \epsilon_2)\)-DP; privacy degrades but scales linearly.

DP for Machine Learning

- Use Differentially Private Stochastic Gradient Descent (DP-SGD): Add noise to gradient sums.

- Clip gradients to bound sensitivity (e.g., max norm \(C\)), then add Gaussian noise for relaxed \((\epsilon, \delta)\)-DP.

- For \(n\) steps, composition yields up to \(n\epsilon\)-DP, improvable to \(O(\sqrt{n} \epsilon)\) with advanced theorems.

- Practical challenges: High noise often degrades performance for full training.

Web-Scale Pretraining and Privacy for Free

Effective Strategy

Pretrain on public data, then fine-tune with DP on private data; leverages transfer learning for noise robustness.Granularity Considerations

Define privacy units (e.g., word, sentence, document, or group chat) to balance utility and protection.Alternatives to Private Finetuning

Use zero/few-shot in-context learning for privacy; effective if tasks align with pretraining, but limited for divergent domains.