Measure Theory Recap

- It is not trivial to define a language model over an uncountable set of sequences.

- We need some measure-theoretic tools to avoid paradoxes.

- Most technical papers aren’t rigorous enough and assume things will work out.

- \(\Omega\) is the sample space (event space), \(\mathcal{F}\) is the sigma-algebra (measurable sets), \(\mathbb{P}\) is the probability measure.

- A probability space is a triple \((\Omega, \mathcal{F}, \mathbb{P})\) where:

- Definition: Sigma-algebra. \(\mathcal{F} \subseteq 2^\Omega\) is a sigma-algebra if:

- \(\emptyset \in \mathcal{F}\).

- \(A \in \mathcal{F} \implies A^c \in \mathcal{F}\).

- \(A_1, A_2, \ldots\) is a countable collection in \(\mathcal{F} \implies \bigcup_{i=1}^\infty A_i \in \mathcal{F}\).

- Examples: The trivial sigma-algebra \(\{\emptyset, \Omega\}\) or the whole power set (discrete sigma-algebra).

- Definition: Sigma-algebra. \(\mathcal{F} \subseteq 2^\Omega\) is a sigma-algebra if:

- Definition: Probability measure over a measurable space \((\Omega, \mathcal{F})\) is a function \(\mathbb{P}: \mathcal{F} \to [0, 1]\) such that:

- \(\mathbb{P}(\Omega) = 1\).

- If \(A_1, A_2, \ldots\) are countable disjoint sets in \(\mathcal{F}\), then \(\mathbb{P}(\bigcup_{i=1}^\infty A_i) = \sum_{i=1}^\infty \mathbb{P}(A_i)\), which is called sigma-additivity.

- We usually cannot have \(\mathcal{F} = 2^\Omega\) because then it is provably impossible to define a probability measure that would have desired properties.

- Definition: Algebra. Like a sigma-algebra but only with finite unions.

- Definition: Probability pre-measure. Like a probability measure but the sigma-additivity has to hold only for unions that are in the algebra (since it’s defined over an algebra).

- Carathéodory’s extension theorem: Allows us to construct simply an algebra over the set with a pre-measure and then extend it to a sigma-algebra while making this extension minimal and unique.

- Definition: Random variable. A function \(X: \Omega \to \mathbb{R}\) between two measurable spaces \((\Omega, \mathcal{F})\) and \((\mathbb{R}, \mathcal{B})\) (Borel sigma-algebra) is a random variable or a measurable mapping if for all sets \(B \in \mathcal{B}\), we have \(X^{-1}(B) \in \mathcal{F}\).

- Each random variable defines a new pushforward probability measure \(\mathbb{P}_X\) on \((\mathbb{R}, \mathcal{B})\) such that \(\mathbb{P}_X(B) = \mathbb{P}(X^{-1}(B))\) for all \(B \in \mathcal{B}\).

Language Models: Distributions over Strings

- Inspiration from formal language theory which works with sets of structures, the simplest of which is a string.

- Alphabet: Finite non-empty set of symbols \(\Sigma\).

- A string over an alphabet \(\Sigma\) is a finite sequence of symbols from \(\Sigma\), denoted as \(s = s_1 s_2 \ldots s_n\) where \(s_i \in \Sigma\).

- A string can be called a word.

- \(|s|\) is the length of the string.

- Only one string of length 0, the empty string \(\epsilon\).

- New strings are formed by concatenation, e.g., \(s_1 s_2\) is a string of length \(|s_1| + |s_2|\), which is an associative operation.

- Concatenation with \(\epsilon\) is the identity operation, aka \(\epsilon\) is the unit and the operation is monoidal.

- Kleene star: \(\Sigma^* = \bigcup_{n=0}^\infty \Sigma^n\) where \(\Sigma^n = \Sigma \times \Sigma \times \ldots \times \Sigma\) (\(n\) times).

- We also define \(\Sigma^+ = \bigcup_{n=1}^\infty \Sigma^n\), which is the set of all non-empty strings over \(\Sigma\).

- We also define the set of all infinite strings over \(\Sigma\) as \(\Sigma^\infty = \Sigma \times \Sigma \times \ldots\) (infinitely many times).

- In computer science (CS), strings are usually finite (not everywhere).

- Kleene star contains all finite strings, including the empty string, and is countably infinite.

- The set of infinite strings is uncountable for alphabets with more than one symbol.

- Definition: Language. Let \(\Sigma\) be an alphabet, then a language over \(\Sigma\) is a subset of \(\Sigma^*\).

- If not specified, we usually assume the language is the whole Kleene star.

- Subsequence: A string \(s\) is a subsequence of a string \(t\) if we can obtain \(s\) by deleting some symbols from \(t\) without changing the order of the remaining symbols.

- Substring: A contiguous subsequence.

- Prefix and suffix are special cases of substrings that share the start or end of the string.

- Prefix notation: \(y_{<n}\) is a prefix of length \(n-1\) of a string \(y\).

- \(y \triangleleft y'\) to denote that \(y\) is a suffix of \(y'\).

Definition of a Language Model

- Now we can write the definition of a language model.

- Definition: \(\Sigma\) is an alphabet, then a language model is a discrete distribution \(p_\mathrm{LM}\) over \(\Sigma^*\).

- Definition: Weighted language is \(L(p_\mathrm{LM}) = \{(s, p_\mathrm{LM}(s)) \mid s \in \Sigma^* \}\).

- We haven’t yet said how to represent such a distribution.

Global and Local Normalization

- By definition, a language model is always tight.

- So by non-tight language models that appear often in practice, we mean that the distribution is not normalized, so it’s technically not a language model, and “tight” acts as a non-intersective adjective.

- These non-tight models do appear, for example, from recurrent neural networks (RNNs) trained from data.

- Depending on whether we model the probability of the whole string or the next symbol, we can have global or local normalization.

- BOS token is a special token that indicates the start of a sequence, so it’s gonna be \(y_0\).

- Augmented alphabet: \(\bar{\Sigma} = \Sigma \cup \{\mathrm{EOS}\}\).

- And also Kleene star of this set is \(\bar{\Sigma}^*\).

- Models that are leaking probability mass to infinite sequences will be called sequence models.

Globally Normalized Language Model or Energy Model

- Definition: Energy function \(\hat{p}: \Sigma^* \to \mathbb{R}\).

- Inspired from statistical physics, an energy function can be used to define a probability distribution by exponentiating its negative value.

- Definition: Globally normalized language model is a function \(p_\mathrm{LM}: \Sigma^* \to [0, 1]\) such that: \[p_\mathrm{LM}(s) = \frac{e^{-\hat{p}(s)}}{\sum_{s' \in \Sigma^*} e^{-\hat{p}(s')}}.\]

- They are easy to define, but hard to compute the normalization constant.

- Definition: Normalizable energy function has a finite normalizer; if not, then it’s ill-defined.

- Any normalizable energy function induces a language model over \(\Sigma^*\).

- In general, it’s undecidable if it’s normalizable; even if so, it can be computationally impossible; it’s also hard to sample from the distribution.

Locally Normalized Models

- We have to know when the termination ends: \(\bar{\Sigma} = \Sigma \cup \{\mathrm{EOS}\}\).

- Definition: (Locally normalized) sequence model is a function \(p_\mathrm{SM}\) over an alphabet \(\Sigma\) is a set of conditional probabilities.

- \(p_\mathrm{SM}(y \mid \mathbf{y})\) where \(\mathbf{y} \in \Sigma^*\) is the history.

- SM can be also over \(\bar{\Sigma}\).

- Definition: Locally normalized language model is a sequence model over \(\bar{\Sigma}\) such that: \[p_\mathrm{LM}(\mathbf{y}) = p_\mathrm{SM}(\mathrm{EOS} \mid \mathbf{y}) \prod_{i=1}^{T} p_\mathrm{SM}(y_i \mid y_{<i}),\] for all \(\mathbf{y} \in \Sigma^*\), with \(|\mathbf{y}| = T\).

- The model is tight if \(1 = \sum_{\mathbf{y} \in \Sigma^*} p_\mathrm{LM}(\mathbf{y})\).

- So again, an LN LM isn’t necessarily an LM; it’s about how we can write it.

- When can an LM be locally normalized?

- Answer: Every model can be locally normalized.

- Definition: Prefix probability. \(\pi(\mathbf{y}) = \sum_{\mathbf{y}' \in \Sigma^*} p_\mathrm{LM}(\mathbf{y} \mathbf{y}')\).

- Probability that \(\mathbf{y}\) is a prefix of any string in the language, the cumulative probability of all strings that start with that prefix.

- Theorem: Let \(p_\mathrm{LM}\) be a language model, then there exists an LN model such that for all \(\mathbf{y} \in \Sigma^*\), \(p_\mathrm{LM} = p_\mathrm{LN}(\mathbf{y}) = p_\mathrm{SM}(\mathrm{EOS} \mid \mathbf{y}) \prod_{i=1}^{T} p_\mathrm{SM}(y_i \mid y_{<i})\).

- Sketch of proof: We define \(p_\mathrm{SM}(y \mid \mathbf{y}) = \frac{\pi(\mathbf{y} y)}{\pi(\mathbf{y})}\) and \(p_\mathrm{SM}(\mathrm{EOS} \mid \mathbf{y}) = \frac{p_\mathrm{LM}(\mathbf{y})}{\pi(\mathbf{y})}\).

- When we then write \(p_\mathrm{LN}(\mathbf{y})\) for \(\pi(\mathbf{y}) > 0\), we get that it is the same as \(p_\mathrm{LM}\).

- And for \(\pi(\mathbf{y}) = 0\), we have \(p_\mathrm{LN}(\mathbf{y}) = 0\), so it’s well-defined.

- LNMs are very common in natural language processing (NLP) even though they are not necessarily language models.

Tight Language Models

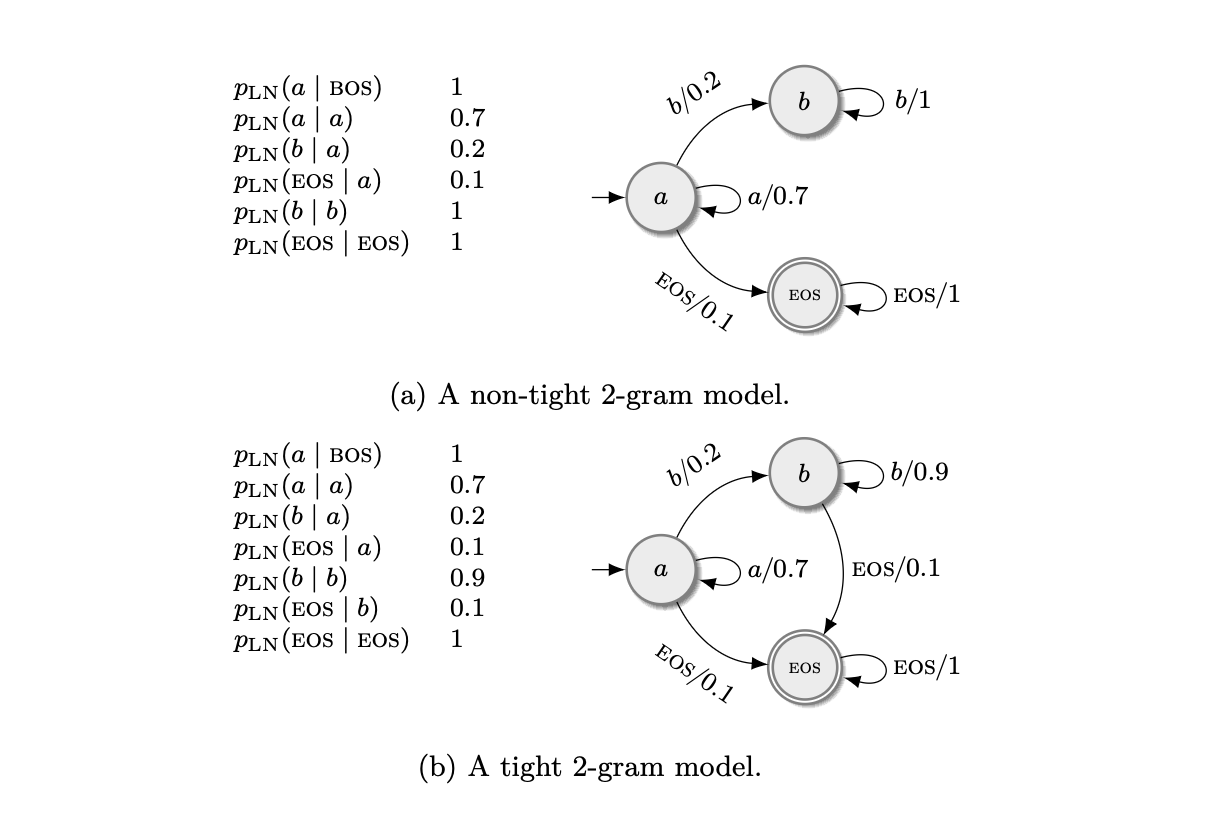

- Locally normalized language models can be non-tight, leaking probability mass to infinite sequences.

- The sum over all sequences of the LN model may not sum to one, but each conditional of \(p_\mathrm{SM}\) is normalized.

- Tight is sometimes called consistent or proper.

- In the example (a) this happens since the only sequences that end with EOS are those that don’t produce \(b\) (description of non-tight 2-gram model where sum = 1/3 <1).

- It is related to the fact that the probability of EOS is 0 for some sequences, but making \(p(\mathrm{EOS} \mid y_{<i}) > 0\) isn’t sufficient nor necessary.

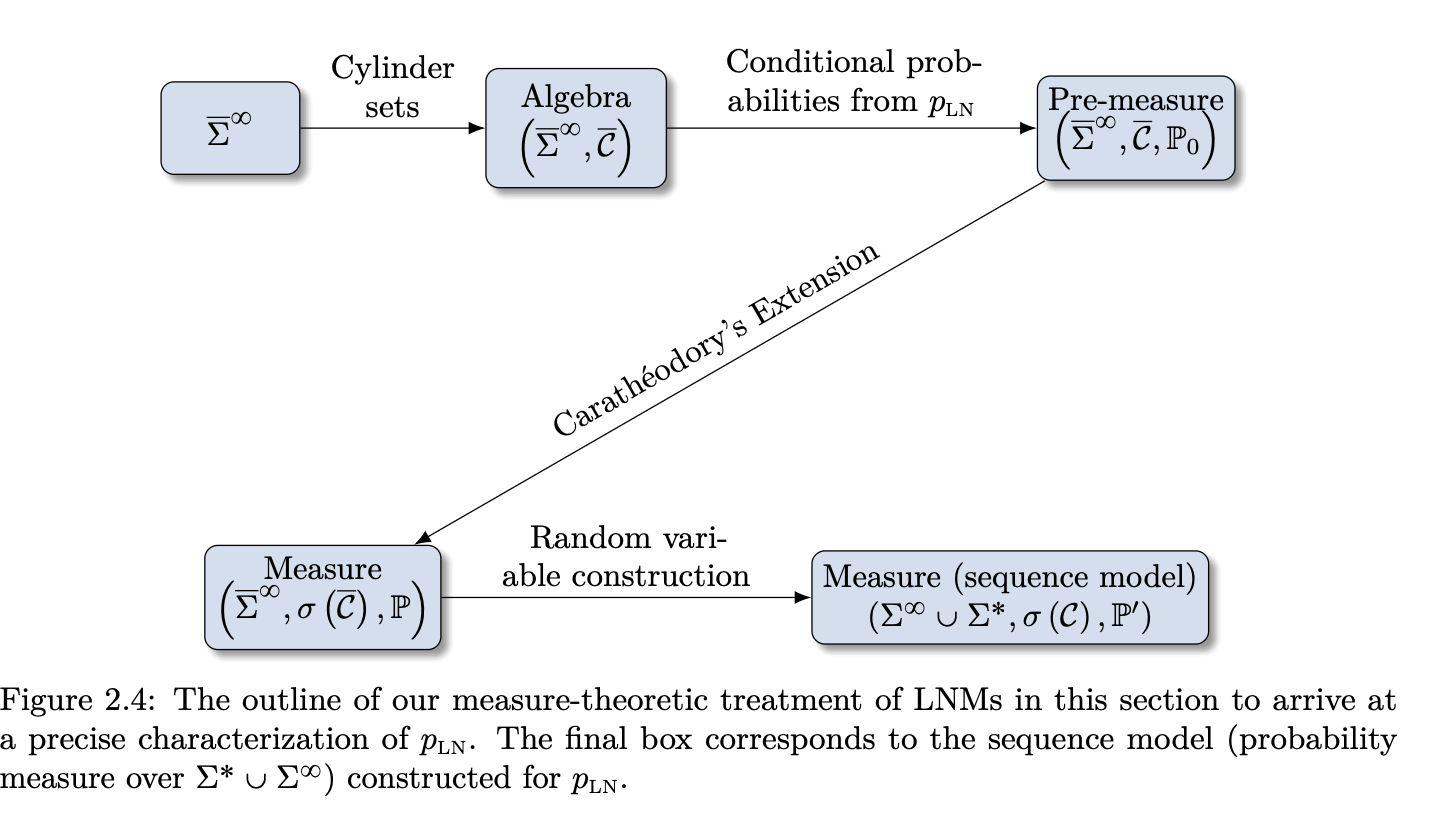

Defining the Probability Measure of an LNM

- We want to investigate what \(p_\mathrm{LN}\) is when it is not an LM.

- We have to be careful since the set of infinite sequences is uncountable.

- We can define them as probability measures over the union of the set of finite and infinite sequences.

- Definition: Sequence model is a probability space over \(\Sigma^* \cup \Sigma^\infty\).

- Definition: Language model is a sequence model over \(\Sigma^*\) or an SM which \(\mathbb{P}(\Sigma^\infty) = 0\).

Defining an Algebra over \(\bar{\Sigma}^\infty\) (Step 1)

- An LNM produces conditionals over the augmented alphabet \(\bar{\Sigma}\).

- We will construct a sigma-algebra over \(\bar{\Sigma}^\infty\) (includes both finite and infinite sequences with EOS multiple times).

- Then, based on \(p_\mathrm{LN}\), we will find its pre-measure that is consistent with \(p_\mathrm{LN}\).

- Definition: Cylinder set of rank \(k\), set of infinite strings that share their \(k\)-prefix where \(\mathcal{H} \subseteq \bar{\Sigma}^k\). \[\bar{C}(\mathcal{H}) \stackrel{\mathrm{def}}{=} \{\mathbf{y} \boldsymbol{\omega} : \mathbf{y} \in \mathcal{H}, \boldsymbol{\omega} \in \bar{\Sigma}^\infty\}.\]

- Collection of all rank \(k\) cylinder sets is denoted as \(\mathcal{C}_k = \{\bar{C}(\mathcal{H}) \mid \mathcal{H} \in \mathcal{P}(\bar{\Sigma}^k)\}\).

- This is a set of sets, so the elements of this set are cylinder sets.

- This sequence is increasing, so \(\mathcal{C}_1 \subseteq \mathcal{C}_2 \subseteq \ldots\).

- Definition: The collection of all cylinder sets on \(\Omega\) is \(\mathcal{C} = \bigcup_{k=1}^{\infty} \mathcal{C}_k\).

- This is an algebra over \(\bar{\Sigma}^\infty\).

Defining a Pre-measure (Step 2)

- We are constructing a pre-measure \(\mathbb{P}_0\) for the cylinder set \(\bar{C}\) given an LNM \(p_\mathrm{LN}\), and any cylinder \(\bar{C}(\mathcal{H}) \in \mathcal{C}\). \[\mathbb{P}_0(\bar{C}(\mathcal{H})) \stackrel{\mathrm{def}}{=} \sum_{\bar{\mathbf{y}} \in \mathcal{H}} p_\mathrm{LN}(\bar{\mathbf{y}}).\]

- We measure this cylinder by summing over all the finite sequences that generate it.

- Where \(p_\mathrm{LN}(\bar{\mathbf{y}}) = \prod_{i=1}^{T} p_\mathrm{LN}(\bar{y}_i \mid \bar{\mathbf{y}}_{<i})\).

- The same cylinder set can have different generator sets, so we have to prove that the function will have the same value for all of them.

- The proofs are skipped; this is just an outline.

Extending Pre-measure into a Measure (Step 3)

- For this, we will use the Carathéodory extension theorem.

- Theorem: Given an algebra \(\mathcal{A}\) over a set \(\Omega\), if \(\mathbb{P}_0\) is a pre-measure on \(\mathcal{A}\), then there exists a probability space \((\Omega, \mathcal{F}, \mathbb{P})\) such that \(\mathcal{A} \subseteq \mathcal{F}\) and \(\mathbb{P}|_{\mathcal{A}} = \mathbb{P}_0\). Furthermore, the sigma-algebra \(\mathcal{F}\) depends only on \(\mathcal{A}\) and is minimal and unique, which we denote \(\sigma(\mathcal{A})\), and the probability measure is unique.

- Using this, we get the space \((\bar{\Sigma}^\infty, \sigma(\mathcal{C}), \mathbb{P})\).

Defining a Sequence Model (Step 4)

- We have created this measure on the extended alphabet, which allows us to measure sequences that contain EOS multiple times.

- We can define a random variable (RV) to map into EOS-free sequences.

- RV is a measurable function between two sigma-algebras.

- We want to map to outcome space \(\Sigma^\infty \cup \Sigma^*\).

- We can do this in the same way as before using cylinder sets where we use prefixes from \(\Sigma^*\). \[C(\mathcal{H}) \stackrel{\mathrm{def}}{=} \{\mathbf{y} \boldsymbol{\omega} : \mathbf{y} \in \mathcal{H}, \boldsymbol{\omega} \in \Sigma^* \cup \Sigma^{\infty}\}.\]

- Notice that now the elements of the cylinder can be also finite.

- We can define the RV \[\mathrm{x}: (\bar{\Sigma}^{\infty}, \sigma(\bar{\mathcal{C}})) \rightarrow (\Sigma^* \cup \Sigma^{\infty}, \sigma(\mathcal{C})),\] which is a measurable mapping \[\mathrm{x}(\boldsymbol{\omega})= \begin{cases} \boldsymbol{\omega}_{<k} & \text{if $k$ is the first EOS in $\boldsymbol{\omega}$}, \\ \boldsymbol{\omega} & \text{otherwise (if EOS $\notin \boldsymbol{\omega}$)}. \end{cases}\]

- So this mapping takes an infinite sequence containing EOS and cuts it before the first occurrence, or returns the whole sequence if EOS is not present.

- So this defines a probability space \((\Sigma^* \cup \Sigma^\infty, \sigma(\mathcal{C}), \mathbb{P}_*)\).

- We have done it!! Given any LNM, we can construct an associated SM, a probability space over \(\Sigma^* \cup \Sigma^\infty\) where probabilities assigned to finite sequences are the same as in the LNM.

Interpreting the Constructed Probability Space

- For finite \(\mathbf{y} \in \Sigma^*\), \(\mathbb{P}_*(\mathbf{y}) = p_\mathrm{LN}(\mathrm{EOS} \mid \mathbf{y}) p_\mathrm{LN}(\mathbf{y})\).

- \(\mathbb{P}_*(\Sigma^\infty)\) is the probability of generating an infinite string.

- Singletons \(\{\mathbf{y}\}\) and \(\Sigma^\infty\) are measurable.

- Proposition: A sequence model is tight iff \(\sum_{\mathbf{y} \in \Sigma^*} \mathbb{P}_*(\{\mathbf{y}\}) = 1\).

- EOS probability emerges as conditional probability of exact string given prefix.

\[ \begin{aligned} p_{\mathrm{LN}}(\mathrm{EOS} \mid \boldsymbol{y}) & =\frac{\mathbb{P}_*(\mathrm{x}=\boldsymbol{y})}{p_{\mathrm{LN}}(\boldsymbol{y})} \\ & =\frac{\mathbb{P}_*(\mathrm{x}=\boldsymbol{y})}{\mathbb{P}_*(\mathrm{x} \in \mathcal{C}(\boldsymbol{y}))} \\ & =\mathbb{P}_*(\mathrm{x}=\boldsymbol{y} \mid \mathrm{x} \in \mathcal{C}(\boldsymbol{y})) \end{aligned} \]

Characterizing Tightness

Event \(A_k = \{\boldsymbol{\omega} \in \bar{\Sigma}^\infty : \omega_k = \mathrm{EOS}\}\).

Infinite strings: \(\bigcap_{k=1}^\infty A_k^c\).

Tight if \(\mathbb{P}(\bigcap_{k=1}^\infty A_k^c) = 0\).

Define \(\tilde{p}_\mathrm{EOS}(t) = \mathbb{P}(A_t \mid \bigcap_{m=1}^{t-1} A_m^c) = \frac{\sum_{\boldsymbol{\omega} \in \Sigma^{t-1}} p_\mathrm{LN}(\boldsymbol{\omega}) p_\mathrm{LN}(\mathrm{EOS} \mid \boldsymbol{\omega})}{\sum_{\boldsymbol{\omega} \in \Sigma^{t-1}} p_\mathrm{LN}(\boldsymbol{\omega})}\).

Theorem (sufficient and necessary condition for tightness): An LNM is tight iff \(\tilde{p}_\mathrm{EOS}(t) = 1\) for some \(t\) or \(\sum_{t=1}^\infty \tilde{p}_\mathrm{EOS}(t) = \infty\).

- Proof: Uses product of conditionals equaling intersection probability; applies Corollary from Borel-Cantelli for series divergence.

Borel-Cantelli Lemmas:

- I: If \(\sum \mathbb{P}(A_n) < \infty\), then \(\mathbb{P}(A_n\) i.o.) = 0.

- II: If independent and \(\sum \mathbb{P}(A_n) = \infty\), then \(\mathbb{P}(A_n\) i.o.) = 1.

- where i.o. means infinitely often which is the same as \(\bigcap_{n=1}^\infty \bigcup_{k=n}^\infty A_k\).

Corollary: \(\prod_{n=1}^\infty (1 - p_n) = 0 \iff \sum_{n=1}^\infty p_n = \infty\).

Theorem (sufficient condition for tightness): If \(p_\mathrm{LN}(\mathrm{EOS} \mid \mathbf{y}) \geq f(t)\) for all \(\mathbf{y} \in \Sigma^t\) and \(\sum_{t=1}^\infty f(t) = \infty\), then tight.

Lower bound via conditional probabilities summing to infinity implies zero mass on infinite intersection. In other words, if the probability of EOS given a prefix is bounded from below by a function that diverges, then the model is tight.

\(\tilde{p}_\mathrm{EOS}(t)\) often intractable due to partition function and therefore the lower bound is used in practice.

Modeling Foundations

- The decisions when we want to build a distribution over strings and estimate its parameters.

- How can a sequence model be parameterized?

- \(p_{\omega}: \Sigma^* \rightarrow \mathbb{R}\), where \(\Sigma^*\) denotes the Kleene closure of the alphabet \(\Sigma\).

- Where parameters \(\omega \in \Theta\) are free parameters of the function.

- Given a parameterized model, how can the parameters be chosen to best reflect the data?

Representation-Based Language Models

- Most modern language models are defined as locally normalized (LN) models.

- However, we first define a sequence model and prove that a specific parameterization encodes a tight LN model.

- We parameterize to enable optimization with respect to some objective.

- One way is to embed individual symbols and possible contexts into a Hilbert space, enabling a notion of similarity.

- Principle: Good representation principle: The success of a machine learning (ML) task depends on chosen representations; in our case, representations of symbols and contexts.

- Definition: Vector space over a field \(\mathbb{F}\): A set \(V\) with two operations—addition and scalar multiplication—satisfying 8 axioms (associativity, commutativity, identity element of addition, inverse elements of addition, compatibility of scalar multiplication with field multiplication, identity element of scalar multiplication, distributivity of scalar multiplication with respect to vector addition, and distributivity of scalar multiplication with respect to field addition).

- A \(D\)-dimensional vector space has \(D\) basis vectors, representable as a \(D\)-dimensional vector in \(\mathbb{F}^D\).

- An inner product is defined as a bilinear form \(\langle \cdot, \cdot \rangle: V \times V \rightarrow \mathbb{F}\) satisfying (conjugate) symmetry, linearity in the first argument, and positive-definiteness.

- The inner product induces a norm \(\|\cdot\|: V \rightarrow \mathbb{R}_{\geq 0}\) defined as \(\|v\| = \sqrt{\langle v, v \rangle}\).

- Hilbert space is a complete inner product space, meaning every Cauchy sequence (or every absolutely convergent sequence in the norm) converges to a limit in the space.

- An inner product space can be completed into a Hilbert space.

- We need a Hilbert space because infinite sequences (e.g., generated by a recurrent neural network, RNN) must lie in the space; e.g., using rationals as the field would not represent all sequences (limit might be irrational like \(\sqrt{2}\)).

- Representation function from a set \(\mathcal{S}\) to a Hilbert space \(V\) is a function mapping elements to their representations.

- If \(\mathcal{S}\) is finite, it can be represented as a matrix \(E \in \mathbb{R}^{|\mathcal{S}| \times D}\), where each row is the representation of a symbol.

- This is the case for embedding symbols from the extended alphabet \(\bar{\Sigma} = \Sigma \cup \{\mathrm{bos}, \mathrm{eos}\}\) via an embedding function \(e: \bar{\Sigma} \rightarrow \mathbb{R}^D\).

- E.g., one-hot encoding assigns each symbol its \(n\)th basis vector (matrix is identity-like); it’s sparse, and cosine similarity is 0 for non-identical symbols.

- We want to capture semantic information.

- For contexts, define an encoding function \(\mathrm{enc}: \bar{\Sigma}^* \rightarrow \mathbb{R}^D\) mapping the context to a vector in the same space.

- For now, treat the function as a black box.

Compatibility of Symbol and Context

- \(\cos(\theta) = \frac{\langle e(y), \mathrm{enc}(\boldsymbol{y}) \rangle}{\|e(y)\| \|\mathrm{enc}(\boldsymbol{y})\|}\).

- Traditionally, use the inner product as the compatibility function.

- For a symbol and context: \(\langle e(y), \mathrm{enc}(\boldsymbol{y}) \rangle\).

- All compatibilities via matrix multiplication: \(E \cdot \mathrm{enc}(\boldsymbol{y})\).

- Entries are often called scores or logits.

- To formulate \(p_{\mathrm{SM}}\), project the scores into a probability simplex.

- Definition: Probability simplex \(\Delta^{D-1} = \{\mathbf{x} \in \mathbb{R}^D \mid x_d \geq 0, \sum_{d=1}^D x_d = 1\}\).

- \(p_{\mathrm{SM}}: V \rightarrow \Delta^{|\bar{\Sigma}|-1}\).

- Need a projection function from logits to the simplex.

- Requires monotonicity, positivity everywhere, and summing to 1.

- Definition: Softmax function:

- \(\mathrm{softmax}: \mathbb{R}^D \rightarrow \Delta^{D-1}\).

- \(\mathrm{softmax}(\mathbf{x})_d = \frac{\exp(x_d / \varepsilon)}{\sum_{j=1}^D \exp(x_j / \varepsilon)}\), where \(\varepsilon > 0\) is the temperature.

- Temperature \(\varepsilon\) controls entropy by scaling logits; in the Boltzmann distribution context, it relates to randomness.

- High \(\varepsilon\): More uniform distribution; low \(\varepsilon\): More peaked; limit as \(\varepsilon \to 0^+\): Argmax (one-hot at maximum).

- Variational characterization: \[ \mathrm{softmax}(\mathbf{x}) = \underset{\mathbf{p} \in \Delta^{D-1}}{\mathrm{argmax}} \left( \mathbf{p}^\top \mathbf{x} - \varepsilon \sum_{d=1}^D p_d \log p_d \right) = \underset{\mathbf{p} \in \Delta^{D-1}}{\mathrm{argmax}} \left( \mathbf{p}^\top \mathbf{x} + \varepsilon H(\mathbf{p}) \right), \] where \(H(\mathbf{p}) = -\sum_d p_d \log p_d\) is entropy.

- Interpreted as the vector with maximal similarity to \(\mathbf{x}\) while regularized for high entropy.

- Non-sparse (zeros only if input is \(-\infty\)).

- Invariant to constant addition: \(\mathrm{softmax}(\mathbf{x} + c \mathbf{1}) = \mathrm{softmax}(\mathbf{x})\).

- Derivative is computable and function is smooth.

- Maintains ordering for all temperatures: If \(x_i > x_j\), then \(\mathrm{softmax}(\mathbf{x})_i > \mathrm{softmax}(\mathbf{x})_j\).

- Other projections like sparsemax: \(\mathrm{sparsemax}(\mathbf{x}) = \underset{\mathbf{p} \in \Delta^{D-1}}{\mathrm{argmin}} \|\mathbf{p} - \mathbf{x}\|_2^2\) (can be sparse, but not everywhere differentiable).

Representation-Based Locally Normalized Language Model

- Definition: \[ p_{\mathrm{SM}}(y_t \mid \boldsymbol{y}_{<t}) \stackrel{\text{def}}{=} f_{\Delta^{|\bar{\Sigma}|-1}} \left( E \cdot \mathrm{enc}(\boldsymbol{y}_{<t}) \right)_{y_t}, \] where \(f\) is typically softmax.

- Defines probability of whole string: \(p_{\mathrm{LN}}(\boldsymbol{y}) = p_{\mathrm{SM}}(\mathrm{eos} \mid \boldsymbol{y}) \prod_{t=1}^T p_{\mathrm{SM}}(y_t \mid \boldsymbol{y}_{<t})\), with \(\boldsymbol{y}_0 = \mathrm{bos}\).

- Encoding functions carry all information about symbol likelihoods given contexts.

- Theorem: Let \(p_{\mathrm{SM}}\) be a representation-based sequence model over alphabet \(\Sigma\).

- \(s \stackrel{\text{def}}{=} \sup_{y \in \Sigma} \|e(y) - e(\mathrm{eos})\|_2\) (largest distance to eos representation).

- \(z_{\max}^t = \max_{\boldsymbol{y} \in \Sigma^t} \|\mathrm{enc}(\boldsymbol{y})\|_2\) (maximum context norm for length \(t\)).

- LN model induced by \(p_{\mathrm{SM}}\) is tight if \(s z_{\max}^t \leq \log t\).

- Theorem: Representation-based LN models with uniformly bounded \(\|\mathrm{enc}(\boldsymbol{y})\|_p\) for some \(p \geq 1\) are tight.

- Often holds in practice due to bounded activation functions, ensuring softmax assigns positive probability to eos.

Estimating a Language Model

- \(D\) is a collection of \(N\) i.i.d. strings from \(\Sigma^*\) generated according to the unknown \(p_{\mathrm{LM}}\) distribution.

- This is often posed as an optimization problem or search for the best parameters; therefore, we must limit the space of possible models.

- We limit ourselves to a parameterized family of models \(\{p_{\omega} \mid \omega \in \Theta\}\), where \(\Theta\) is the parameter space.

- General Framework

- We search for parameters \(\hat{\omega}\) that maximize a chosen objective, or alternatively, minimize some loss function \(\varsigma: \Theta \times \Theta \rightarrow \mathbb{R}_{\geq 0}\).

- We seek the model \(\hat{\omega} = \arg\min_{\omega \in \Theta} \varsigma(\omega^*, \omega)\).

- We don’t know \(\omega^*\) but can estimate it using the empirical distribution from the corpus pointwise.

- Definition: \(\hat{p}_{\omega^*}(\boldsymbol{y}) = \frac{1}{N} \sum_{n=1}^N \delta_{\boldsymbol{y}^{(n)}}(\boldsymbol{y})\).

- This can also be decomposed on the level of the symbols.

- We focus on the generative paradigm where we model the distribution not just boundaries between classes; this is usually the case for language models where we use unannotated data with self-supervised learning.

- We can now measure the distance between these distributions using cross-entropy, which has its roots in information theory.

- \(\varsigma(\omega^*, \omega) = H(\hat{p}_{\omega^*}, p_{\omega})\).

- In general, cross-entropy is defined as \(H(p_1, p_2) = -\sum_{\boldsymbol{y} \in \mathcal{Y}} p_1(\boldsymbol{y}) \log p_2(\boldsymbol{y})\), where the sum is over the support \(\mathcal{Y}\) of \(p_1\).

- Usually written in the LN form: \(H(p_1, p_2) = -\sum_{\boldsymbol{y} \in \mathcal{Y}} \sum_{t=1}^T p_1(y_t \mid \boldsymbol{y}_{<t}) \log p_2(y_t \mid \boldsymbol{y}_{<t})\).

- CE is not symmetric; CE between two distributions is the average number of bits needed to encode samples from \(p_1\) using a code optimized for \(p_2\).

- The optimal encoding for \(p_1\) uses \(-\log p_1(y)\) bits to encode an event with prob \(p_1(y)\), so the minimal value for CE is when \(p_1 = p_2\).

- Related to KL divergence, which is defined as \(D_{\mathrm{KL}}(p_1 \| p_2) = H(p_1, p_2) - H(p_1, p_1)\), which could be used as a loss with the same results since the entropy term doesn’t depend on the parameters.

- Relationship to MLE

- Data likelihood \(\mathcal{L}(\omega) = \prod_{n=1}^N p_{\omega}(\boldsymbol{y}^{(n)})\).

- Principle: The optimal parameters are those that maximize the likelihood of the data observed.

- We usually work with log-likelihood, since it’s convex and numerically more stable.

- The optimal parameters found using MLE are the same as those found using minimizing the cross-entropy loss with empirical distribution.

- These multiple perspectives show us that we want to be close w.r.t. a given metric and place probability mass on the observed data.

- Theorem: MLE is consistent: Consider loss \(\varsigma(\omega^*, \omega) = H(\hat{p}_{\omega^*}, p_{\omega})\) and that data is i.i.d. generated from the language model. Under regularity conditions for the parametric family and that the minimizer is unique, the estimator \(\hat{\omega}\) is a consistent estimator, i.e., it converges in probability as \(N \rightarrow \infty\) to the true parameters \(\omega^*\).

- Up to here we assumed that the optimal model lives in the parametric space, which in practice is not the case; we can still prove relationship between the true model and its projection onto the parametric space being close to the estimated model.

- Problems with CE: The model has to place mass on all strings in \(\Sigma^*\), to avoid infinite loss; this is called mean-seeking behavior.

- It is unclear whether this is desirable or not.

- Teacher forcing: We have only considered loss functions that condition on the previous symbol, like a time series, and even if the model predicts wrong, we condition in the next step on the ground truth; training with CE mandates this, but this can lead to bad performance if we score the model’s prediction on a whole sequence; this is called exposure bias. On the other hand, using previous predictions can lead to training instability.

- Masked Language Modeling

- Sequential conditioning useful for generation.

- But seeing both the previous and next symbol is useful for classification.

- Sometimes called pseudo-log-likelihood. \[ \ell_{\mathrm{MLM}}(\omega)=\sum_{n=1}^N\sum_{t=1}^T\log p_\omega(y_t^{(n)}\mid\boldsymbol{y}_{<t}^{(n)},\boldsymbol{y}_{>t}^{(n)}). \]

- BERT (2019) best known masked language model.

- They are not LMs in the strict sense since they don’t model a valid distribution over \(\Sigma^*\).

- Popular for language tasks and fine-tuning.

- Other Divergence Measures

- There has been work on using other divergence measures that can better capture the tails of the distributions since language has long tail, in contrast to mean-seeking behavior of CE.

- There are computational issues with other measures.

- For example, we can estimate the forward KL using MC only using the samples from the data but we don’t have access to the true distribution.

- Scheduled Sampling (Bengio 2015)

- After initial period, some part of predictions is conditioned on the previous prediction, rather than the ground truth.

- This is done to avoid exposure bias.

- The estimator no longer consistent.

- These and other methods aim to specify an alternative distribution of high-quality texts rather than average texts and use RL methods like the REINFORCE algorithm to optimize the model.

- There can be also other auxiliary tasks that are intertwined in the training like next sentence prediction, frequency prediction, sentence order prediction, etc.; these methods usually lead to invalidate the formal requirements for a true LM.

Parameter Estimation

- Finding best parameters analytically or manually is not possible.

- We have to resort to numerical optimization.

- To have an unbiased estimate of the model performance we have to split the data into training, validation, and test set.

- Most are gradient methods.

- Backprop = reverse-mode autodiff.

- We can compute gradients with the same complexity as the forward pass.

- Normal GD is over the whole dataset, but this is not feasible for large datasets.

- Usually we use SGD or mini-batch SGD to estimate the gradients but trade off higher variance.

- Curriculum learning: Start with easy training examples and gradually increase the difficulty.

- Usually ADAM that uses estimates of the first and second moments of the gradients; this is often used in practice.

- Parameter initialization is very important, can result in bad local minima or slow convergence; Xavier or He for ReLU.

- Early stopping: Stop training when the validation loss stops improving; this is to avoid overfitting, though recently people observe double descent or grokking which suggests learning longer may be beneficial in specific cases.

- Regularization: Is a technique which modifies the training with the intention of improving generalization performance perhaps at the cost of training performance, aka making the training harder in some way.

- Most common in language models:

- Weight decay: Sometimes called L2 regularization, penalizes large weights by adding a term to the loss function that is proportional to the square of the magnitude of the weights.

- Entropy regularization: Principle of maximum entropy: Best prior is the one that is least informative; label smoothing and confidence penalty add terms to the loss that penalize peaky distributions.

- Dropout: Randomly sets a fraction of the activations to zero during training; this avoids overly relying on a specific signal and also can be viewed as an ensemble method.

- Batch and layer norm:

- Batch norm: Normalizes the activations of a layer across the batch; this helps with convergence and stability.

- Layer norm: Normalizes the activations across the features; this is often used in transformer models.

Classical Language Models

This chapter introduces classical language modeling frameworks, focusing on finite-state language models (a generalization of n-gram models). These older approaches distill key concepts due to their simplicity and serve as baselines for modern neural architectures discussed in Chapter 5. Key questions: How can we tractably represent conditional distributions \(p_{SM}(y \mid \mathbf{y})\)? How can we represent hierarchical structures in language?

Finite-State Language Models

- Finite-state language models generalize n-gram models and are based on finite-state automata (FSA), which distinguish a finite number of contexts when modeling conditional distributions \(p_M(y \mid \mathbf{y})\).

- Informal Definition: A language model \(p_{LM}\) is finite-state if it defines only finitely many unique conditional distributions \(p_{LM}(y \mid \mathbf{y})\).

- This bounds the number of distributions to learn but is insufficient for human language; still useful as baselines and theoretical tools.

Finite-State Automata (FSA)

- Definition: An FSA is a 5-tuple \((\Sigma, Q, I, F, \delta)\), where:

- \(\Sigma\) is the alphabet.

- \(Q\) is a finite set of states.

- \(I \subseteq Q\) is the set of initial states.

- \(F \subseteq Q\) is the set of final states.

- \(\delta \subseteq Q \times (\Sigma \cup \{\epsilon\}) \times Q\) is a finite multiset of transitions (notation: \(q_1 \xrightarrow{a} q_2\) for \((q_1, a, q_2) \in \delta\); multiset allows duplicates).

- Graphically: Labeled directed multigraph; vertices = states; edges = transitions; initial states marked by incoming arrow; final states by double circle.

- FSA reads input string \(y \in \Sigma^*\) sequentially, transitioning per \(\delta\); starts in \(I\); \(\epsilon\)-transitions consume no input.

- Non-determinism: Multiple transitions possible; take all in parallel.

- Deterministic FSA: No \(\epsilon\)-transitions; at most one transition per \((q, a) \in Q \times \Sigma\); single initial state.

- Deterministic and non-deterministic FSAs are equivalent (can convert between them).

- Accepts \(y\) if ends in \(F\) after reading \(y\).

- Language: \(L(\mathcal{A}) = \{ y \mid y\) recognized by \(\mathcal{A} \}\).

- Regular Language: \(L \subseteq \Sigma^*\) is regular iff recognized by some FSA.

Weighted Finite-State Automata (WFSA)

- Augment FSA with real-valued weights.

- Definition: WFSA \(\mathcal{A} = (\Sigma, Q, \delta, \lambda, \rho)\), where:

- \(\delta \subseteq Q \times (\Sigma \cup \{\epsilon\}) \times \mathbb{R} \times Q\) (notation: \(q \xrightarrow{a/w} q'\)).

- \(\lambda: Q \to \mathbb{R}\) (initial weights).

- \(\rho: Q \to \mathbb{R}\) (final weights).

- Implicit initials: \(\{q \mid \lambda(q) \neq 0\}\); finals: \(\{q \mid \rho(q) \neq 0\}\).

- Transition Matrix: \(T(a)_{ij} =\) sum of weights from \(i\) to \(j\) labeled \(a\); \(T = \sum_{a \in \Sigma} T(a)\).

- Path: Sequence of consecutive transitions; \(|\pi| =\) length; \(p(\pi), n(\pi) =\) origin/destination; \(s(\pi) =\) yield.

- Path sets: \(\Pi(\mathcal{A}) =\) all paths; \(\Pi(\mathcal{A}, y) =\) yielding \(y\); \(\Pi(\mathcal{A}, q, q') =\) from \(q\) to \(q'\).

- Path Weight: Inner \(w_I(\pi) = \prod_{n=1}^N w_n\) for \(\pi = q_1 \xrightarrow{a_1/w_1} q_2 \cdots q_N\); full \(w(\pi) = \lambda(p(\pi)) \cdot w_I(\pi) \cdot \rho(n(\pi))\).

- Accepting: \(w(\pi) \neq 0\).

- Stringsum: \(\mathcal{A}(y) = \sum_{\pi \in \Pi(\mathcal{A}, y)} w(\pi)\).

- Weighted Language: \(L(\mathcal{A}) = \{(y, \mathcal{A}(y)) \mid y \in \Sigma^*\}\); weighted regular if from WFSA.

- State Allsum (backward value \(\beta(q)\)): \(Z(\mathcal{A}, q) = \sum_{\pi: p(\pi)=q} w_I(\pi) \cdot \rho(n(\pi))\).

- Allsum: \(Z(\mathcal{A}) = \sum_y \mathcal{A}(y) = \sum_\pi w(\pi)\); normalizable if finite.

- Accessibility: \(q\) accessible if non-zero path from initial; co-accessible if to final; useful if both.

- Trim: Remove useless states (preserves non-zero weights).

- Probabilistic WFSA (PFSA): \(\sum_q \lambda(q)=1\); weights \(\geq 0\); for each \(q\), \(\sum w + \rho(q)=1\) over outgoing and final.

- Final weights replace EOS; corresponds to locally normalized models.

Finite-State Language Models (FSLM)

- Definition: \(p_{LM}\) finite-state if \(L(p_{LM}) = L(\mathcal{A})\) for some WFSA (weighted regular language).

- Path/String Prob in PFSA: Path prob = weight; string prob \(p_{\mathcal{A}}(y) = \mathcal{A}(y)\) (local; may not be tight).

- String Prob in WFSA: \(p_{\mathcal{A}}(y) = \mathcal{A}(y) / Z(\mathcal{A})\) (global, tight if normalizable, non-negative).

- Induced LM: \(p_{LM^{\mathcal{A}}}(y) = p_{\mathcal{A}}(y)\).

Normalizing Finite-State Language Models

- Pairwise pathsums: \(M_{ij} = \sum_{\pi: i \to j} w_I(\pi)\); \(Z(\mathcal{A}) = \vec{\lambda} M \vec{\rho}\).

- Proof: Group paths by origin/destination; factor \(\lambda(i), \rho(j)\).

- \(T^d_{ij} = \sum w_I(\pi)\) over length-\(d\) paths \(i \to j\) (induction).

- \(T^* = \sum_{d=0}^\infty T^d = (I - T)^{-1}\) if converges; \(M = T^*\).

- Runtime \(O(|Q|^3)\); Lehmann’s algorithm special case.

- Converges iff spectral radius \(\rho_s(T) < 1\) (eigenvalues \(<1\) in magnitude).

- Speed-ups: Acyclic = linear in transitions (Viterbi); sparse components combine algorithms.

- Differentiable for gradient-based training.

Locally Normalizing a Globally Normalized Finite-State Language Model

- Instance of weight pushing; computes state allsums, reweights transitions.

- Theorem: Normalizable non-negative WFSA and tight PFSA equally expressive.

- Proof (\(\Leftarrow\)): Trivial (tight PFSA is WFSA with \(Z=1\)).

- (\(\Rightarrow\)): For trim \(\mathcal{A}_G\), construct \(\mathcal{A}_L\) with same structure; reweight initials \(\lambda_L(q) = \lambda(q) Z(\mathcal{A}, q) / Z(\mathcal{A})\); finals \(\rho_L(q) = \rho(q) / Z(\mathcal{A}, q)\); transitions \(w_L = w \cdot Z(\mathcal{A}, q') / Z(\mathcal{A}, q)\).

- Non-negative; sum to 1 (by allsum recursion lemma).

- Path probs match: Internal allsums cancel; leaves \(w(\pi)/Z(\mathcal{A})\).

- Lemma (Allsum Recursion): \(Z(\mathcal{A}, q) = \sum_{q \xrightarrow{a/w} q'} w \cdot Z(\mathcal{A}, q') + \rho(q)\).

- Proof: Partition paths by first transition; recurse on tails.

Defining a Parametrized Globally Normalized Language Model

- Parametrize transitions via score \(f_\theta^\delta: Q \times \Sigma \times Q \to \mathbb{R}\); initials \(f_\theta^\lambda: Q \to \mathbb{R}\); finals \(f_\theta^\rho: Q \to \mathbb{R}\).

- Defines \(\mathcal{A}_\theta\); prob \(p(y) = \mathcal{A}_\theta(y) / Z(\mathcal{A}_\theta)\); energy \(E(y) = -\log \mathcal{A}_\theta(y)\) for GN form.

- \(f_\theta\) can be neural net/lookup; states encode context (e.g., n-grams).

- Trainable via differentiable allsum/stringsum.

Tightness of Finite-State Models

- Globally normalized finite-state language models are tight by definition, as probabilities sum to 1 over all finite strings.

- Focus on locally normalized finite-state models, which are exactly probabilistic weighted finite-state automata (PFSA).

- Theorem: A PFSA is tight if and only if all accessible states are also co-accessible.

- Proof (\(\Rightarrow\)): Assume tight. Let \(q \in Q\) be accessible (reachable with positive probability). By tightness, there must be positive probability path from \(q\) to termination; otherwise, non-tight after reaching \(q\). Thus, \(q\) co-accessible.

- (\(\Leftarrow\)): Assume all accessible states co-accessible. Consider Markov chain on accessible states \(Q_A \subseteq Q\) (others have probability 0). EOS is absorbing and reachable from all (by co-accessibility). Suppose another absorbing set distinct from EOS; it cannot reach EOS, contradicting co-accessibility. Thus, EOS unique absorbing state; process absorbed with probability 1. Hence, tight.

- Trimming a PFSA may violate sum=1 condition: \(\rho(q) + \sum_{q \xrightarrow{a/w} q'} w \leq 1\); such are substochastic WFSA.

- Definition (Substochastic WFSA): WFSA where \(\lambda(q) \geq 0\), \(\rho(q) \geq 0\), \(w \geq 0\), and for all \(q\), \(\rho(q) + \sum w \leq 1\).

- Theorem: Let \(T'\) be transition matrix of trimmed substochastic WFSA. Then \(I - T'\) invertible; termination probability \(p(\Sigma^*) = \vec{\lambda}' (I - T')^{-1} \vec{\rho}' \leq 1\).

- Spectral Radius: \(\rho_s(M) = \max \{|\lambda_i|\}\) over eigenvalues \(\lambda_i\).

- Lemma: \(\rho_s(T') < 1\).

- Proof: Assume Frobenius normal form \(T' =\) block upper triangular with irreducible blocks \(T'_k\). Each \(T'_k\) substochastic and strictly so (trimmed implies no probabilistic block without finals, contradicting co-accessibility). By Proposition 4.1.1, \(\rho_s(T'_k) < 1\) (row sums <1 or mixed < max row sum). Thus, \(\rho_s(T') = \max \rho_s(T'_k) < 1\).

- Proof: \(\rho_s(T') < 1\) implies \(I - T'\) invertible; Neumann series \((I - T')^{-1} = \sum_{k=0}^\infty (T')^k\) converges. Then \(p(\Sigma^*) = \sum_{k=0}^\infty P(\Sigma^k) = \sum_{k=0}^\infty \vec{\lambda}' (T')^k \vec{\rho}' = \vec{\lambda}' (I - T')^{-1} \vec{\rho}' \leq 1\) (substochastic).

The n-gram Assumption and Subregularity

- n-gram models are a special case of finite-state language models; all results apply.

- Modeling all conditional distributions \(p_{SM}(y_t \mid \mathbf{y}_{<t})\) is intractable (infinite histories as \(t \to \infty\)).

- Assumption (n-gram): Probability of \(y_t\) depends only on previous \(n-1\) symbols: \(p_{SM}(y_t \mid \mathbf{y}_{<t}) = p_{SM}(y_t \mid \mathbf{y}_{t-n+1}^{t-1})\).

- Alias: \((n-1)\)th-order Markov assumption in language modeling.

- Handle edges (\(t < n\)): Pad with BOS symbols, transforming \(\mathbf{y}_{1:t} \to\) BOS repeated \(n-1-t\) times + \(\mathbf{y}_{1:t}\); assume \(p_{SM}\) ignores extra BOS.

- Limits unique distributions to \(O(|\Sigma|^{n-1})\); dependencies span at most \(n\) tokens; parallelizable (e.g., like transformers).

- Historical significance: Shannon (1948), Baker (1975), Jelinek (1976), etc.; baselines for neural models.

- WFSA Representation of n-gram Models: n-gram LMs are finite-state (finite histories).

- Alphabet \(\Sigma\) (exclude EOS; use finals).

- States \(Q = \bigcup_{t=0}^{n-1} \{\text{BOS}\}^{n-1-t} \times \Sigma^t\).

- Transitions: From history \(\mathbf{y}_{t-n:t-1}\) to \(\mathbf{y}_{t-n+1:t}\) on \(y_t\) with weight \(p_{SM}(y_t \mid \mathbf{y}_{t-n:t-1})\).

- Initial: \(\lambda(\text{BOS}^{n-1}) = 1\), others 0.

- Final: \(\rho(\mathbf{y}) = p_{SM}(\text{EOS} \mid \mathbf{y})\).

- Proof of equivalence left to reader; models up to first EOS.

- Parametrized WFSA for n-gram: Parametrize scores for “suitability” of n-grams; globally normalize; fit via training (e.g., §3.2.3).

- Subregularity: Subregular languages recognized by FSA or weaker; hierarchies within regular languages.

- n-gram models are strictly local (SL), simplest non-finite subregular class (patterns on consecutive blocks independently).

- Definition (Strictly Local): Language \(L\) is \(SL_n\) if for every \(|\mathbf{y}|=n-1\), and all \(\mathbf{x}_1, \mathbf{x}_2, \mathbf{z}_1, \mathbf{z}_2\), if \(\mathbf{x}_1 \mathbf{y} \mathbf{z}_1 \in L\) and \(\mathbf{x}_2 \mathbf{y} \mathbf{z}_2 \in L\), then \(\mathbf{x}_1 \mathbf{y} \mathbf{z}_2 \in L\) (and symmetric). SL if \(SL_n\) for some n.

- Matches n-gram: History >n irrelevant.

Representation-Based n-gram Models

- Simple: Parametrize \(\theta = \{\zeta_{y'|\mathbf{y}} = p_{SM}(y' \mid \mathbf{y}) \mid |\mathbf{y}|=n-1, y' \in \Sigma\}\), non-negative, sum to 1 per context.

- MLE: \(\zeta_{y_n | \mathbf{y}_{<n}} = C(\mathbf{y}) / C(\mathbf{y}_{<n})\) if defined (\(C=\) corpus counts).

- Proof: Log-likelihood \(\ell(\mathcal{D}) = \sum_{|\mathbf{y}|=n} C(\mathbf{y}) \log \zeta_{y_n | \mathbf{y}_{<n}}\) (token-to-type switch); KKT yields solution (independent per context).

- Issues: No word similarity (e.g., “cat” unseen but similar to “kitten”, “puppy”); no generalization across paraphrases.

- Neural n-gram (Bengio et al., 2003): Use embeddings \(E \in \mathbb{R}^{|\Sigma| \times d}\); encoder \(\text{enc}(\mathbf{y}_{t-n+1}^{t-1}) = b + W x + U \tanh(d + H x)\), \(x =\) concat\((E[y_{t-1}], \dots, E[y_{t-n+1}])\).

- \(p_{SM}(y_t \mid \mathbf{y}_{<t}) = \text{softmax}( \text{enc}^T E + b )_{y_t}\); locally normalized model.

- Shares parameters via embeddings/encoder; generalizes semantically; parameters linear in \(n\) (vs. \(|\Sigma|^n\)).

- Still n-gram (finite context); WFSA-representable with neural-parametrized weights.

Pushdown Language Models

- Human language has unbounded recursion (e.g., center embeddings: “The cat the dog the mouse… likes to cuddle”), requiring infinite states; not regular.

- Context-free languages (CFL) capture hierarchies; generated by context-free grammars (CFG), recognized by pushdown automata (PDA).

- Competence vs. Performance: Theoretical grammar allows arbitrary depth; actual use limits nesting (rarely >3; Miller and Chomsky, 1963).

Context-Free Grammars (CFG)

- Definition: 4-tuple \(G = (\Sigma, N, S, P)\); \(\Sigma\) terminals; \(N\) non-terminals (\(N \cap \Sigma = \emptyset\)); \(S \in N\) start; \(P \subseteq N \times (N \cup \Sigma)^*\) productions (\(X \to \alpha\)).

- Rule Application: Replace \(X\) in \(\beta = \gamma X \delta\) with \(\alpha\) to get \(\gamma \alpha \delta\) if \(X \to \alpha \in P\).

- Derivation: Sequence \(\alpha_1, \dots, \alpha_M\) with \(\alpha_1 \in N\), \(\alpha_M \in \Sigma^*\), each from applying rule to previous.

- Derives: \(X \implies_G \beta\) if direct; \(X \overset{*}{\implies}_G \beta\) if transitive closure.

- Language: \(L(G) = \{ y \in \Sigma^* \mid S \overset{*}{\implies}_G y \}\).

- Parse Tree: Tree with root \(S\), internal nodes non-terminals, children per production RHS; yield \(s(d) =\) leaf concatenation.

- Derivation Set: \(D_G(y) = \{d \mid s(d)=y\}\); \(D_G =\) union over \(y \in L(G)\); \(D_G(Y) =\) subtrees rooted at \(Y\) (\(D_G(a)=\emptyset\) for terminal \(a\)).

- Ambiguity: Grammar ambiguous if some \(y\) has \(|D_G(y)| >1\); unambiguous if =1.

- Example: \(G\) for \(\{a^n b^n \mid n \geq 0\}\); unique trees.

- Dyck Languages \(D(k)\): Well-nested brackets of \(k\) types; grammar with \(S \to \epsilon | SS | \langle_n S \rangle_n\).

- Reachable/Generating: Symbol reachable if \(S \overset{*}{\implies} \alpha X \beta\); non-terminal generating if derives some \(y \in \Sigma^*\).

- Pruned CFG: All non-terminals reachable and generating.

Weighted Context-Free Grammars (WCFG)

- Definition: 5-tuple \((\Sigma, N, S, P, W)\); \(W: P \to \mathbb{R}\).

- Tree Weight: \(w(d) = \prod_{(X \to \alpha) \in d} W(X \to \alpha)\).

- Stringsum: \(G(y) = \sum_{d \in D_G(y)} w(d)\).

- Allsum (Non-terminal \(Y\)): \(Z(G, Y) = \sum_{d \in D_G(Y)} w(d)\); terminals \(Z(a)=1\); \(Z(G)=Z(G,S)\); normalizable if finite.

- Probabilistic CFG (PCFG): Weights \(\geq 0\); \(\sum_{X \to \alpha} W(X \to \alpha)=1\) per \(X\).

- Context-Free LM: \(p_{LM}\) context-free if \(L(p_{LM}) = L(G)\) for some WCFG.

- Tree/String Prob in PCFG: Tree prob = weight; string prob \(p_G(y) = G(y)\).

- String Prob in WCFG: \(p_G(y) = G(y)/Z(G)\).

- Induced LM: \(p_{LM^G}(y) = p_G(y)\).

Tightness of Context-Free Language Models

- GN always tight.

- Generation Level: \(\gamma_0 = S\); \(\gamma_l =\) apply all productions to non-terminals in \(\gamma_{l-1}\).

- Production Generating Function: For \(X_n\), \(g_n(s_1,\dots,s_N) = \sum_{X_n \to \alpha} W(X_n \to \alpha) s_1^{r_1(\alpha)} \cdots s_N^{r_N(\alpha)}\) (\(r_m(\alpha)=\) occurrences of \(X_m\) in \(\alpha\)).

- Generating Function: \(G_0(s_1,\dots,s_N)=s_1\) (for \(S=X_1\)); \(G_l(s_1,\dots,s_N) = G_{l-1}(g_1(s),\dots,g_N(s))\).

- \(G_l = D_l(s) + C_l\); \(C_l =\) prob of terminating in \(\leq l\) levels.

- Lemma: PCFG tight iff \(\lim_{l \to \infty} C_l =1\).

- Proof: Limit is prob of finite strings; <1 implies non-zero infinite prob.

- First-Moment Matrix: \(E_{nm} = \partial g_n / \partial s_m |_{s=1} = \sum_{X_n \to \alpha} W(X_n \to \alpha) r_m(\alpha)\) (expected occurrences of \(X_m\) from \(X_n\)).

- Theorem: PCFG tight if \(|\lambda_{\max}(E)| <1\); non-tight if \(>1\).

- Proof: Coeff of \(s_1^{r_1} \cdots s_N^{r_N}\) in \(G_l\) is prob of \(r_m\) \(X_m\) at level \(l\). Expected \(\vec{r}_l = \vec{r}_0 E^l = [1,0,\dots,0] E^l\). Tight iff \(\lim \vec{r}_l =0\) iff \(\lim E^l=0\) iff \(|\lambda_{\max}|<1\) (diverges if \(>1\)).

- MLE-trained WCFG always tight (Chi, 1999).

Normalizing Weighted Context-Free Grammars (WCFG)

- To use a WCFG as a language model, compute stringsum \(G(y)\) and allsum \(Z(G)\) for normalization.

- Allsum algorithm derives solutions to nonlinear equations (beyond scope; see Advanced Formal Language Theory for details).

- Theorem (Smith and Johnson, 2007): Normalizable WCFG with non-negative weights and tight probabilistic context-free grammars (PCFG) are equally expressive.

- Proof (\(\Leftarrow\)): Tight PCFG is WCFG with \(Z(G)=1\); trivial.

- (\(\Rightarrow\)): Let \(G_G = (\Sigma, N, S, P, W)\) be pruned normalizable non-negative WCFG. Construct \(G_L = (\Sigma, N, S, P, W_L)\) with same structure.

- \(W_L(X \to \alpha) = W(X \to \alpha) \cdot \prod_{Y \in \alpha} Z(G, Y) / Z(G, X)\) (reweight using allsums).

- Non-negative (inherits from \(W\)).

- Sums to 1 per \(X\): By allsum definition \(Z(G, X) = \sum_{X \to \alpha} W(X \to \alpha) \prod_{Y \in \alpha} Z(G, Y)\).

- Path/tree probs match: In product over tree, internal allsums cancel; leaves \(w(d)/Z(G)\).

- Equivalence via reweighting preserves language.

Single-Stack Pushdown Automata

- CFGs generate languages; pushdown automata (PDA) recognize them (decide membership).

- PDA extend FSA with a stack for unbounded memory.

- Definition: PDA \(\mathcal{P} = (\Sigma, Q, \Gamma, \delta, (q_\iota, \gamma_\iota), (q_\varphi, \gamma_\varphi))\), where:

- \(\Sigma\): Input alphabet.

- \(Q\): Finite states.

- \(\Gamma\): Stack alphabet.

- \(\delta \subseteq Q \times \Gamma^* \times (\Sigma \cup \{\epsilon\}) \times Q \times \Gamma^*\): Transition multiset.

- \((q_\iota, \gamma_\iota)\): Initial configuration (\(q_\iota \in Q\), \(\gamma_\iota \in \Gamma^*\)).

- \((q_\varphi, \gamma_\varphi)\): Final configuration.

- Stack as string \(\gamma = X_1 \cdots X_n\) (\(\Gamma^*\); \(X_1\) bottom, \(X_n\) top; \(\emptyset\) empty).

- Configuration: Pair \((q, \gamma)\), \(q \in Q\), \(\gamma \in \Gamma^*\).

- Transition \(q \xrightarrow{a, \gamma_1 \to \gamma_2} r\): From \(q\), read \(a\) (or \(\epsilon\)), pop \(\gamma_1\) from top, push \(\gamma_2\); go to \(r\).

- Scanning: Transition scans \(a\) if reads \(a\); scanning if \(a \neq \epsilon\), else non-scanning.

- Moves: If configs \((q_1, \gamma \gamma_1)\), \((q_2, \gamma \gamma_2)\), and transition \(q_1 \xrightarrow{a, \gamma_1 \to \gamma_2} q_2\), write \((q_1, \gamma \gamma_1) \implies (q_2, \gamma \gamma_2)\).

- Run: Sequence \((q_0, \gamma_0), t_1, (q_1, \gamma_1), \dots, t_n, (q_n, \gamma_n)\) where each step follows a transition \(t_i\); accepting if starts initial, ends final.

- Scans \(y = a_1 \cdots a_n\) if transitions scan \(a_i\)’s.

- Sets: \(\Pi(\mathcal{P}, y) =\) accepting runs scanning \(y\); \(\Pi(\mathcal{P}) =\) all accepting runs.

- Recognition: PDA recognizes \(y\) if \(\Pi(\mathcal{P}, y) \neq \emptyset\).

- Language: \(L(\mathcal{P}) = \{y \mid \Pi(\mathcal{P}, y) \neq \emptyset\}\).

- Deterministic PDA: No \(\epsilon\)-transitions reading input; at most one transition per \((q, a, \gamma)\); no \(\epsilon\) if input transition exists.

- Non-deterministic PDA more expressive; recognize exactly CFL (Theorem 4.2.3; Sipser, 2013).

Weighted Pushdown Automata

- Definition (WPDA): As PDA but \(\delta \subseteq Q \times \Gamma^* \times (\Sigma \cup \{\epsilon\}) \times Q \times \Gamma^* \times \mathbb{R}\).

- Finite actions (multiset).

- Run Weight: \(w(\pi) = \prod_{i=1}^n w_i\) over transition weights \(w_i\).

- Stringsum: \(\mathcal{P}(y) = \sum_{\pi \in \Pi(\mathcal{P}, y)} w(\pi)\).

- Recognizes \(y\) with weight \(\mathcal{P}(y)\).

- Weighted Language: \(L(\mathcal{P}) = \{(y, \mathcal{P}(y)) \mid y \in \Sigma^*\}\).

- Allsum: \(Z(\mathcal{P}) = \sum_{\pi \in \Pi(\mathcal{P})} w(\pi)\); normalizable if finite.

- Probabilistic PDA (PPDA): Weights \(\geq 0\); for each config \((q, \gamma)\), sum to 1 over transitions with pop prefix of \(\gamma\).

- Theorem: CFL iff PDA-recognizable; PCFG-generated iff PPDA-recognizable (Abney et al., 1999).

- Theorem: Globally normalized WPDA can be locally normalized (to equivalent PPDA).

- Proof: Convert to WCFG, locally normalize to PCFG (Theorem 4.2.2), convert back to PPDA (Abney et al., 1999; Butoi et al., 2022).

Pushdown Language Models

- Definition: Language model \(p_{LM}\) is pushdown if \(L(p_{LM}) = L(\mathcal{P})\) for some WPDA.

- Equivalent to context-free LM for single-stack (by PDA-CFG equivalence).

- Induced LM: \(p_{LM^{\mathcal{P}}}(y) = \mathcal{P}(y) / Z(\mathcal{P})\).

Multi-Stack Pushdown Automata

- Extend to multiple stacks; 2-stack suffices for max expressivity.

- Definition (2-PDA): \(\mathcal{P} = (\Sigma, Q, \Gamma_1, \Gamma_2, \delta, (q_\iota, \gamma_{\iota1}, \gamma_{\iota2}), (q_\varphi, \gamma_{\varphi1}, \gamma_{\varphi2}))\); \(\delta \subseteq Q \times \Gamma_1^* \times \Gamma_2^* \times (\Sigma \cup \{\epsilon\}) \times Q \times \Gamma_1^* \times \Gamma_2^*\).

- Configurations \((q, \gamma_1, \gamma_2)\); runs/acceptance analogous.

- 2-WPDA: Add \(\mathbb{R}\) to \(\delta\) for weights.

- Probabilistic 2-PDA (2-PPDA): Weights \(\geq 0\); sum to 1 per config over transitions with pop prefixes.

- Theorem: 2-PDA Turing complete (simulate TM tape with stacks; Theorem 8.13, Hopcroft et al., 2006).

- Thus, weighted/probabilistic 2-PDA Turing complete; recognize distributions over recursively enumerable languages.

- Theorem: Tightness of 2-PPDA undecidable.

- Proof: Tight iff halts with prob 1 on inputs (measure on \(\Sigma^* \cup \Sigma^\infty\)). Simulate TM; equivalent to TM halting with prob 1 (halting problem variant, undecidable).

- Example: Poisson over \(\{a^n \mid n \geq 0\}\) not context-free (Icard, 2020).

Neural Network Language Models

Recurrent Neural Networks (RNNs)

Sequential Processing and Infinite Context

- RNNs are designed to process sequences one element at a time, maintaining a hidden state that summarizes all previous inputs.

- They can, in theory, make decisions based on an unbounded (potentially infinite) context.

Turing Completeness

- RNNs are Turing complete under certain assumptions (e.g., infinite precision, unbounded computation time), meaning they can simulate any computation a Turing machine can perform.

Tightness

- The concept of “tightness” for language models (LMs) is important for ensuring that the probability mass does not “leak” to infinite-length sequences.

- We will analyze under what conditions RNN-based LMs are tight.

Human Language is Not Context-Free

- Human languages exhibit phenomena (e.g., cross-serial dependencies in Swiss German) that cannot be captured by context-free grammars (CFGs).

- Example: In Swiss German, certain dependencies between verbs and their arguments “cross” in a way that CFGs cannot model.

- These dependencies require more expressive power than CFGs provide.

- RNNs, due to their flexible and potentially infinite state space, can model such dependencies.

- Human languages exhibit phenomena (e.g., cross-serial dependencies in Swiss German) that cannot be captured by context-free grammars (CFGs).

RNNs as State Transition Systems

- RNNs can be viewed as systems that transition between (possibly infinitely many) hidden states.

- The hidden state in an RNN is analogous to the state in a finite-state automaton (FSA), but in language modeling, we are typically interested in the output probabilities, not the hidden state itself.

Formal Definition: Real-Valued RNN

- A real-valued RNN is a 4-tuple \((\Sigma, D, f, h_0)\):

- \(\Sigma\): Alphabet of input symbols.

- \(D\): Dimension of the hidden state vector.

- \(f: \mathbb{R}^D \times \Sigma \to \mathbb{R}^D\): Dynamics map (transition function).

- \(h_0 \in \mathbb{R}^D\): Initial hidden state.

- Rational RNNs are defined analogously, with hidden states in \(\mathbb{Q}^D\).

- In practice, RNNs use floating-point numbers, so they are rational-valued.

- Defining RNNs over \(\mathbb{R}\) is useful for theoretical analysis (e.g., differentiability for gradient-based learning).

- A real-valued RNN is a 4-tuple \((\Sigma, D, f, h_0)\):

RNNs as Encoders in Language Modeling

- RNNs are used to compute an encoding function \(\mathrm{enc}_{\mathcal{R}}\), which produces the hidden state for a given input prefix: \[ \mathbf{h}_t = \mathrm{enc}_{\mathcal{R}}(\mathbf{y}_{<t+1}) \overset{\mathrm{def}}{=} \mathbf{f}(\mathrm{enc}_{\mathcal{R}}(\mathbf{y}_{<t}), y_t) \in \mathbb{R}^D \]

- This encoding is then used in a general language modeling framework (see Chapter 3), typically with an output matrix \(E\) to define a sequence model (SM).

One-Hot Encoding Notation

- For a symbol \(y \in \Sigma\), the one-hot encoding is: \[

[y] \overset{\mathrm{def}}{=} \mathbf{d}_{n(y)}

\]

- Here, \(n(y)\) is the index of \(y\) in the alphabet, and \(\mathbf{d}_{n(y)}\) is the corresponding basis vector.

- For a symbol \(y \in \Sigma\), the one-hot encoding is: \[

[y] \overset{\mathrm{def}}{=} \mathbf{d}_{n(y)}

\]

Activation Functions

- An activation function in an RNN operates elementwise on the hidden state vector.

- Common choices: \(\tanh\), sigmoid, ReLU.

General Results on Tightness

- Translation from Chapter 3

- The tightness results for general LMs are now applied to RNNs with softmax output.

- Tightness Condition for Softmax RNNs

- A softmax RNN sequence model is tight if, for all time steps \(t\), \[

s \| h_t \|_2 \leq \log t

\]

- \(s = \max_{y \in \Sigma} \| e(y) - e(\text{EOS}) \|_2\)

- \(e(y)\) is the embedding of symbol \(y\); EOS is the end-of-sequence symbol.

- A softmax RNN sequence model is tight if, for all time steps \(t\), \[

s \| h_t \|_2 \leq \log t

\]

- Bounded Dynamics Implies Tightness

- Theorem: RNNs with a bounded dynamics map \(f\) are tight.

- If \(|f(x)_d| \leq M\) for all \(d\) and \(x\), then the norm of the hidden state is bounded.

- All RNNs with bounded activation functions (e.g., \(\tanh\), sigmoid) are tight.

- RNNs with unbounded activation functions (e.g., ReLU) may not be tight.

- Example: If the hidden state grows linearly with \(t\), the model is not tight.

- Theorem: RNNs with a bounded dynamics map \(f\) are tight.

Elman and Jordan Networks

Elman Network (Vanilla RNN)

- One of the earliest and simplest RNN architectures, originally used for sequence transduction.

- Dynamics Map: \[

\mathbf{h}_t = \sigma \left( \mathbf{U} \mathbf{h}_{t-1} + \mathbf{V} e(y_t) + \mathbf{b} \right)

\]

- \(\sigma\): Elementwise nonlinearity (e.g., \(\tanh\), sigmoid).

- \(e(y_t)\): Static symbol embedding (can be learned or fixed).

- \(\mathbf{U}\): Recurrence matrix (applies to previous hidden state).

- \(\mathbf{V}\): Input matrix (applies to input embedding).

- \(\mathbf{b}\): Bias vector.

- Notes:

- \(e(y)\) can be learned directly, so \(\mathbf{V}\) is theoretically superfluous, but allows flexibility (e.g., when \(e\) is fixed).

- The input embedding and output embedding matrices can be different.

Jordan Network

- Similar to Elman, but the hidden state is computed based on the previous output (logits), not the previous hidden state.

- Dynamics Map: \[

\begin{aligned}

&\mathbf{h}_t = \sigma\left(\mathbf{U} \mathbf{r}_{t-1} + \mathbf{V} e'(y_t) + \mathbf{b}_h\right) \\

&\mathbf{r}_t = \sigma_o(\mathbf{E} \mathbf{h}_t)

\end{aligned}

\]

- \(\mathbf{r}_{t-1}\): Transformed output from previous time step.

- \(\mathbf{E}\): Output matrix.

- \(\sigma_o\): Output nonlinearity (often softmax).

- Notes:

- This architecture “feeds back” the output logits into the hidden state.

- Conditional probabilities are computed by applying softmax to the output.

Matrix Naming Conventions

- \(\mathbf{U}\): Recurrence matrix (applies to previous hidden state or output).

- \(\mathbf{V}\): Input matrix (applies to input embedding).

- \(\mathbf{E}\): Output matrix (applies to hidden state to produce logits).

Variations on RNNs

Theoretical Expressiveness vs. Practical Issues

- In theory, simple RNNs (Elman/Jordan) are sufficient to model any computable language.

- In practice, they suffer from:

- Difficulty learning long-term dependencies.

- Vanishing and exploding gradients.

- Limited interaction between input and hidden state (linear, summed transformation at each step).

Gating Mechanisms

- Gating allows the network to control, for each dimension of the hidden state, how much to retain, update, or forget, based on the previous hidden state and current input.

- Gates:

- Real-valued vectors in \([0, 1]^D\).

- Computed by gating functions (typically using sigmoid activations).

- Act as “soft switches” for each hidden state component.

- If a gate value is close to 0, that dimension is “forgotten” (zeroed out after elementwise multiplication).

- Benefits:

- Helps mitigate vanishing gradient problem by allowing gradients to flow more easily.

- Enables selective memory and forgetting.

Most Common Gated RNNs: LSTM and GRU

LSTM (Long Short-Term Memory) [Hochreiter & Schmidhuber, 1997]

- Maintains an additional memory cell \(c_t\).

- Uses input, forget, and output gates to control information flow.

- Equations: \[ \begin{aligned} &i_t = \sigma(U^i h_{t-1} + V^i e'(y_t) + b^i) \\ &f_t = \sigma(U^f h_{t-1} + V^f e'(y_t) + b^f) \\ &o_t = \sigma(U^o h_{t-1} + V^o e'(y_t) + b^o) \\ &g_t = \tanh(U^g h_{t-1} + V^g e'(y_t) + b^g) \\ &c_t = f_t \odot c_{t-1} + i_t \odot g_t \\ &h_t = o_t \odot \tanh(c_t) \end{aligned} \]

- \(i_t\): Input gate, \(f_t\): Forget gate, \(o_t\): Output gate, \(g_t\): Candidate vector, \(c_t\): Memory cell, \(h_t\): Hidden state.

- LSTMs have significantly more parameters than vanilla RNNs.

GRU (Gated Recurrent Unit)

- Simpler than LSTM, combines input and forget gates into an update gate.

- No separate memory cell.

- Equations: \[ \begin{aligned} &r_t = \sigma(U^r h_{t-1} + V^r e'(y_t) + b^r) \quad \text{(reset gate)} \\ &z_t = \sigma(U^z h_{t-1} + V^z e'(y_t) + b^z) \quad \text{(update gate)} \\ &g_t = \tanh(U^g (r_t \odot h_{t-1}) + V^g e'(y_t) + b^g) \quad \text{(candidate vector)} \\ &h_t = (1 - z_t) \odot g_t + z_t \odot h_{t-1} \end{aligned} \]

- The update gate \(z_t\) determines the mixing proportion between the candidate and previous hidden state.

- The reset gate \(r_t\) controls how much of the previous hidden state to forget.

Parallelizability: The Achilles’ Heel of RNNs

- Inherent Sequentiality

- RNNs process input strictly sequentially: to compute \(h_t\), all previous \(h_{t-1}, h_{t-2}, \ldots, h_0\) must be computed first.

- This serial dependency leads to:

- Slow training times, especially for long sequences.

- Inability to parallelize computation across time steps during training.

- For prediction, to compute the next token given a context, the RNN must process the entire context sequentially.

- By contrast, transformer architectures can parallelize across sequence positions during training (but not during generation, which is inherently sequential for all locally normalized LMs).

Representational Capacity of Recurrent Neural Networks

RNNs and Weighted Regular Languages

Computational Expressivity of Elman RNNs:

- Elman RNNs, also known as vanilla RNNs, are the simplest recurrent architecture and serve as a baseline for understanding RNN capabilities due to their core recurrent update mechanism.

- In practical settings with finite precision arithmetic (like floating-point numbers on computers) and real-time computation (constant number of operations per input symbol), Elman RNNs are equivalent to weighted finite-state automata, meaning they can only recognize regular languages and their weighted variants.

- This limitation arises because finite precision leads to a finite number of possible hidden states, effectively making the RNN behave like a large but finite automaton.

- Key detail: Despite being finite-state, Elman RNNs can represent automata with an exponential number of states relative to the hidden dimension (e.g., \(2^D\) possible binary configurations), providing a compact, learnable parameterization of potentially huge finite-state models.

What Language Models Can Heaviside RNNs Represent?:

- Heaviside RNNs (HRNNs) are Elman RNNs using the Heaviside step function as activation: it outputs 1 if the input is non-negative, else 0, creating binary hidden states.

- HRNNs exactly represent the weighted regular languages defined by deterministic probabilistic finite-state automata (PFSAs), which are locally normalized weighted finite-state automata.

- In other words, any distribution an HRNN computes is regular (can be modeled by a PFSA), and vice versa, any deterministic PFSA can be simulated by an HRNN.

- Limitation: HRNNs cannot represent non-regular languages, such as context-free ones (e.g., balanced parentheses or \(\{a^n b^n | n \in \mathbb{N}\}\)), because their binary states and deterministic transitions keep them within the regular class.

- Practical relevance: Models finite-precision RNNs theoretically; all real-world RNNs (with finite bits) are ultimately regular, though with vast state spaces.

- Proof intuition: From HRNN to PFSA by enumerating finite binary hidden states; from PFSA to HRNN via encoding states into hidden vectors (detailed below).

Intuition Behind the Minsky Construction, Space Complexity, and Implications of Determinism:

- Intuition of Minsky’s Construction:

- To simulate a deterministic PFSA with states \(Q\) and alphabet \(\Sigma\), represent the current state-symbol pair \((q_t, y_t)\) as a one-hot vector in a hidden space of size \(|Q| \cdot |\Sigma|\).

- The recurrence matrix \(U\) has columns that encode the “out-neighborhood” of each state (all possible next states and symbols from \(q\)), repeated for each incoming symbol since the incoming symbol doesn’t affect future transitions.

- The input matrix \(V\) encodes, for each symbol \(y\), all states reachable by \(y\) from any state.

- Bias vector set to -1 enables element-wise conjunction (AND operation) via Heaviside: Adding \(U h_t\) (out-neighborhood) and \(V [y_{t+1}]\) (y-reachable states), then applying Heaviside with bias selects the unique next state due to determinism.

- Output matrix \(E\) encodes transition probabilities: For softmax, use log weights (with \(\log(0) = -\infty\) to exclude impossible transitions); projection yields the same local distributions as the PFSA.

- Overall: Each RNN step simulates one PFSA transition by “looking up” and conjoining possibilities, ensuring the hidden state tracks the PFSA state.

- Space Complexity:

- Hidden dimension \(D = |Q| \cdot |\Sigma|\), linear in both the number of states and alphabet size.

- This is compact compared to explicitly storing a huge automaton graph, as parameters (\(U\), \(V\), \(E\)) are shared and learnable via gradients.

- More efficient constructions exist for unweighted cases: Dewdney achieves \(O(|\Sigma| \cdot |Q|^{3/4})\) using square-root encodings and matrix decompositions; Indyk achieves optimal \(O(|\Sigma| \cdot \sqrt{|Q|})\) for binary alphabets using four-hot encodings and non-decreasing decompositions.

- For weighted (probabilistic) cases, linearity in \(|Q|\) is necessary because independent state distributions require the output matrix to span \(\mathbb{R}^{|Q|}\).

- Alphabet-wise: Generally linear in \(|\Sigma|\) (dense encodings fail for overlapping transitions); logarithmic possible for “log-separable” automata (at most one transition per state pair), achievable via a separation algorithm that expands states to \(O(|Q| \cdot |\Sigma|)\) but allows overall compression to \(O(\log |\Sigma| \cdot \sqrt{|\Sigma| \cdot |Q|})\).

- Implications of Determinism in RNNs:

- RNNs are inherently deterministic: Each input sequence follows a single hidden state path, matching deterministic PFSAs.

- Cannot directly represent non-deterministic PFSAs, which are more expressive and can be exponentially more compact (non-determinism allows ambiguity resolved by weights).

- To simulate non-deterministic ones, must determinize first, potentially causing exponential state explosion (e.g., some PFSAs have probabilities not expressible as single terms without blowup).

- Key detail: Example non-determinizable PFSA assigns probs like \(0.5 \cdot 0.9^n \cdot 0.1 + 0.5 \cdot 0.1^n \cdot 0.9\), requiring choice that determinism can’t replicate efficiently.

- Takeaway: Determinism restricts RNNs to deterministic regular languages; non-determinism in classical models highlights RNN limitations under finite constraints.

- Intuition of Minsky’s Construction:

Example: Deterministic PFSA Encoded via Minsky Construction

We define a small deterministic PFSA:

- Alphabet: \(\Sigma = \{a, b\}\)

- States: \(Q = \{q_0, q_1\}\)

- Start state: \(q_0\)

- Transitions:

| Current state | Symbol | Next state | Probability |

|---|---|---|---|

| \(q_0\) | a | \(q_0\) | 0.7 |

| \(q_0\) | b | \(q_1\) | 0.3 |

| \(q_1\) | a | \(q_0\) | 1.0 |

| \(q_1\) | b | \(q_1\) | 0.0 |

Minsky Construction Parameters:

Hidden dimension:

\[ D = |Q| \times |\Sigma| = 2 \times 2 = 4 \]

Hidden units correspond to \((\text{state}, \text{symbol})\) pairs:

- \((q_0, a)\)

- \((q_0, b)\)

- \((q_1, a)\)

- \((q_1, b)\)

U (out-neighborhoods, \(4 \times 4\)):

\[ U = \begin{bmatrix} 1 & 0 & 1 & 0 \\ 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 0 & 1 & 0 & 1 \end{bmatrix} \]

V (reachable-by-symbol masks, \(4 \times 2\)):

\[ V = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \end{bmatrix} \]

Bias:

\[ b = (-1, -1, -1, -1)^\top \]

Activation: Heaviside step function.

Output matrix \(E\) (log-probabilities for \(\Sigma = \{a, b\}\)):

\[ E = \begin{bmatrix} \log 0.7 & \log 0.3 \\ \log 0.7 & \log 0.3 \\ 0 & -\infty \\ 0 & -\infty \end{bmatrix} \]

Rows 1–2 correspond to \(q_0\), rows 3–4 to \(q_1\).

One Update Step: From \((q_0, a)\) with next symbol \(b\)

Initial hidden state \(h_t\) = one-hot for \((q_0, a)\):

\[ h_t = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} \]

Next input symbol: \(b\) → one-hot \([y_{t+1}] = (0, 1)^\top\)

Out-neighborhood mask:

\[ U h_t = \begin{bmatrix} 1 \\ 1 \\ 0 \\ 0 \end{bmatrix} \]

From \((q_0, a)\) → next state \(q_0\) → possible next units = \((q_0, a)\) [1], \((q_0, b)\) [2].

Reachable-by-symbol mask for \(b\):

\[ V[b] = \begin{bmatrix} 0 \\ 1 \\ 0 \\ 1 \end{bmatrix} \]

All units with symbol \(b\) are active.

Add masks:

\[ U h_t + V[b] = \begin{bmatrix} 1 \\ 2 \\ 0 \\ 1 \end{bmatrix} \]

Subtract bias:

\[ (U h_t + V[b]) - 1 = \begin{bmatrix} 0 \\ 1 \\ -1 \\ 0 \end{bmatrix} \]

Apply Heaviside: \[ h_{t+1} = \begin{bmatrix} 0 \\ 1 \\ 0 \\ 0 \end{bmatrix} \] New hidden state = \((q_0, b)\).

One Sampling Step from \((q_0, b)\)

Compute logits:

\[ E h_{t+1} = \text{row 2 of } E = (\log 0.7, \log 0.3) \]

Apply softmax:

\[ P(a) = 0.7, \quad P(b) = 0.3 \]

Sample according to this distribution:

- With probability \(0.7\) → emit \(a\)

- With probability \(0.3\) → emit \(b\)

The emitted symbol becomes the next input for the following update step.

Turing Completeness of RNNs

What Needs to Change to Get Turing Completeness?:

- Start from Heaviside RNNs (regular expressivity) and make these changes:

- Activation: Switch to saturated sigmoid, which clips to [0,1] but preserves fractional values in (0,1) for encoding unbounded data.

- Precision: Allow infinite (arbitrary) precision in weights and computations, enabling representation of infinite sequences in fixed dimensions via decimals.

- Time: Permit unbounded computation time per input symbol (non-real-time), allowing multi-step updates to simulate complex operations like stack manipulations.

- These relaxations enable RNNs to simulate unbounded memory structures (e.g., stacks) and thus pushdown automata or Turing machines.

- Key detail: Infinite precision stores entire histories as infinite decimals (e.g., stack 00110111 as 0.33313311); unbounded time allows dividing/multiplying fractions without fixed steps.

- Start from Heaviside RNNs (regular expressivity) and make these changes:

Core Result: Saturated Sigmoid Elman RNNs are Turing Complete:

- They can simulate any Turing machine by encoding two-stack pushdown automata (known to be Turing equivalent).

- Single-Stack Simulation (Recognizes Deterministic Context-Free Languages):

- Stack encoding: Represent sequence as base-10 fraction (e.g., symbols 0/1 as digits 1/3; push by divide by 10 + offset, pop by multiply by 10 - offset).

- Hidden state components (21 dimensions): Data (stack + two buffers for temp copies), top flags (empty, top=0, top=1), configs (top + input symbol combos), computations (try all actions: push0/1, pop0/1, no-op), acceptance flag.

- Update in four phases per symbol (unbounded time): (1) Detect top symbol, (2) combine with input to flag config, (3) compute all actions but zero invalid ones (based on PDA transitions), (4) copy valid result to stack.

- Details: Use matrix multiplications for copying/erasing; saturated sigmoid for detection (e.g., AND via sum-threshold, conditional reset).

- Example: Simulates PDA on “ab” with phase-by-phase hidden state tables, showing stack updates from empty to 0.1 then back to empty.

- Two-Stack Extension: Duplicate data/computation components for second stack; updates handle both independently, simulating two-stack PDA.

- Takeaway: RNNs jump from regular to Turing complete with relaxations, encoding infinite memory in finite dimensions via precision.

Is a Real-Time RNN Turing Complete?:

- No: Real-time RNNs (fixed constant operations per symbol) are limited to regular languages, equivalent to finite-state automata.

- Reason: Cannot perform the unbounded computations needed to manipulate infinite-precision encodings (e.g., arbitrary stack ops) within constant time; hidden states remain effectively finite.

- Key detail: Practical RNNs are real-time, hence regular; Turing completeness strictly requires non-real-time multi-phase updates.

- Takeaway: Time bound is crucial; without it, RNNs are universal, but real-world constraints keep them finite-state.

Computational Power of RNN Variants

- LSTMs: Empirically learn counting (e.g., recognize \(a^n b^n c^n\)); behave like counter machines, crossing Chomsky hierarchy (some context-free and context-sensitive languages, but not all CF like Dyck).

- Detail: Memory cell acts as counter; more expressive than vanilla RNNs/GRUs.

- GRUs and Elman: Rationally recurrent (hidden updates computable by finite-state machines); limited to regular languages.

- LSTMs: Not rationally recurrent, allowing greater expressivity (e.g., counting beyond regular).

- Takeaway: Gating in LSTMs enables mechanisms like counting, surpassing vanilla RNNs but still below full Turing under practical constraints.

Consequences of Turing Completeness

- Turing completeness implies undecidability of key properties: No algorithms exist to determine tightness (prob mass on finite strings =1), find highest-probability string, check equivalence of two RNN LMs, or minimize hidden size while preserving the language model.

- Reason: Reductions from the halting problem (undecidable: Does a Turing machine halt on input?).

- Details: Construct RNNs simulating arbitrary TMs; property holds iff TM halts (e.g., tightness if simulation ends, else mass leaks to infinity).

- Takeaway: While theoretically powerful, RNNs inherit TM undecidability, making tasks like verification or optimization impossible in general.

Transformer-based Language Models

- Transformers were introduced by Vaswani et al. (2017) as an architecture for sequence modeling, originally for machine translation, but widely used in language modeling (e.g., GPT models).